Introduction

Welcome to the OpenPRoT documentation!

This documentation provides comprehensive information about the OpenPRoT project, including user guides, developer documentation, and API references.

What is OpenPRoT?

OpenPRoT is an open-source, Rust-based project designed to provide a secure and reliable foundation for platform security. It offers a flexible and extensible framework for developing firmware and security-related applications for a variety of platforms.

At its core, OpenPRoT provides a Hardware Abstraction Layer (HAL) that enables platform-agnostic application development. On top of this HAL, it offers a suite of services and protocols for platform security, including device attestation, secure firmware updates, and more. The project is designed with a strong emphasis on modern security protocols and standards, such as:

- SPDM (Security Protocol and Data Model): For secure communication and attestation.

- MCTP (Management Component Transport Protocol): As a transport for management and security protocols.

- PLDM (Platform Level Data Model): For modeling platform components and their interactions.

Project Goals

The primary goals of the OpenPRoT project are to:

- Promote Security: Provide a robust and secure foundation for platform firmware, leveraging modern, industry-standard security protocols.

- Ensure Reliability: Offer a high-quality, well-tested, and reliable codebase for critical platform services.

- Enable Extensibility: Design a modular and extensible architecture that can be easily adapted to different hardware platforms and use cases.

- Foster Collaboration: Build an open and collaborative community around platform security.

Documentation Overview

This documentation is structured to help you understand and use OpenPRoT effectively. Here’s a guide to the different sections:

- Getting Started: A hands-on guide to setting up your development environment and building your first OpenPRoT application.

- Specification: The OpenPRoT specification.

- Repo Structure: A high-level overview of the OpenPRoT repository structure, including its major components and their interactions.

- Usage: Detailed instructions on how to use the OpenPRoT framework and its various services.

- Design: In-depth design documents and specifications for the various components of OpenPRoT.

- Contributing: Guidelines for contributing to the OpenPRoT project.

Repositories

- https://github.com/OpenPRoT/openprot: Main OpenPRoT repository.

- https://github.com/OpenPRoT/mctp-rs: MCTP protocol support for Linux and embedded platforms.

Contact Us

- Email us at openprot-wg@lists.chipsalliance.org

- Join our public mailing list at https://lists.chipsalliance.org/g/openprot-wg

Quick Start

To get started with OpenPRoT, you can build and test the project using the following commands:

cargo xtask build

cargo xtask test

For more detailed instructions, please refer to the Getting Started guide.

Governance

The OpenPRoT Technical Charter can be found here.

Security Policy

Please refer to the Security Policy for more information.

License

Unless otherwise noted, everything in this repository is covered by the Apache License, Version 2.0.

Getting Started

Prerequisites

- Rust 1.70 or later

- Cargo

Installation

Clone the repository:

git clone <repository-url>

cd openprot

Build the project:

cargo xtask build

Run tests:

cargo xtask test

Next Steps

- Read the Usage guide

- Check out the Architecture documentation

- Learn about Contributing

Usage

Available Commands

The project uses xtask for automation. Here are the available commands:

Build Commands

cargo xtask build # Build the project

cargo xtask check # Run cargo check

cargo xtask clippy # Run clippy lints

Test Commands

cargo xtask test # Run all tests

Formatting Commands

cargo xtask fmt # Format code

cargo xtask fmt --check # Check formatting

Distribution Commands

cargo xtask dist # Create distribution

Documentation Commands

cargo xtask docs # Build documentation

Utility Commands

cargo xtask clean # Clean build artifacts

cargo xtask cargo-lock # Manage Cargo.lock

OpenPRoT Specification

Version: v0.5 - Work in Progress

Introduction

The concept of a Platform Root of Trust (PRoT) is central to establishing a secure computing environment. A PRoT is a trusted component within a system that serves as the foundation for all security operations. It is responsible for ensuring that the system boots securely, verifying the integrity of the firmware and software, and performing critical cryptographic functions. By acting as a trust anchor, the PRoT provides a secure starting point from which the rest of the system's security measures can be built. This is particularly important in an era where cyber threats are becoming increasingly sophisticated, targeting the lower layers of the computing stack, such as firmware, to gain persistent access to systems.

OpenPRoT is a project intended to enhance the security and transparency of PRoTs by defining and building an open source firmware stack that can be run on a variety of hardware implementations. Open source firmware offers several benefits that can enhance the effectiveness and trustworthiness of a PRoT. Firstly, open source firmware allows for greater transparency, as the source code is publicly available for review and audit. This transparency helps identify and mitigate vulnerabilities more quickly, as a global community of developers and security experts can scrutinize the code. It also reduces the risk of hidden backdoors or malicious code, which can be a concern with proprietary firmware.

Moreover, an open source firmware stack can foster innovation and collaboration within the industry. By providing a common platform that is accessible to all, developers can contribute improvements, share best practices, and develop new security features that benefit the entire ecosystem. This collaborative approach can lead to more robust and resilient firmware solutions, as it leverages the collective expertise of a diverse community. Additionally, open source firmware can enhance interoperability and reduce vendor lock-in, giving organizations more flexibility in choosing hardware and software components that best meet their security needs.

Incorporating an open source firmware stack into a PRoT not only strengthens the security posture of a system but also aligns with broader industry trends towards openness and collaboration. As organizations increasingly recognize the importance of securing the foundational layers of their computing environments, the combination of a PRoT with open source firmware represents a powerful strategy for building trust and resilience in the face of evolving cyber threats.

Background

Today's Platform Root of Trust (PRoT) solutions are often specifically designed for their target platform, relying on custom interfaces or protocols. This leads to slower time-to-market due to the integration effort required. Customers can become locked into a single solution, making it costly and time-consuming to change suppliers, which in turn introduces supply chain risks.

The Open Platform Root-of-Trust (OpenPRoT) stack is an open and extensible standards-compliant root-of-trust firmware stack for use in root-of-trust elements. The project was initiated to create an OCP specification for a Platform Root of Trust software stack, along with an open-source implementation of that specification. The OpenPRoT stack provides base root-of-trust services in an open architecture that allows hardware vendors to provide both standard and value-added services.

Goals

The vision of the OpenPRoT project is to enable implementation consistency, transparency, openness, reusability, and interoperability. The primary goals of the project are to:

- Create an OCP specification for a Platform Root of Trust (PRoT) firmware stack.

- Create an open-source implementation of the specification.

- Target new and existing PRoT hardware implementations, with a preference for Open Silicon.

- Enable optionality for integrators through stable interfaces while maintaining a high security bar.

- Standardize PRoT hardware interfaces, such as an add-in card connector on the DC-SCM board.

- Promote reusability through collaboration with standards bodies and hardware RoT projects to create robust, modular firmware.

Industry standards and specifications

OpenPRoT is designed to be a standards-based and interoperable Platform Root of Trust (PRoT) solution. This ensures that OpenPRoT can be integrated into a wide range of platforms and that it leverages proven and well-defined security and management protocols.

Distributed Management Task Force (DMTF)

- DSP0274: Security Protocol and Data Model (SPDM) Version 1.3 or later

- DSP0277: Secured Messages using SPDM over MCTP Binding

- DSP0236: Management Component Transport Protocol (MCTP) Base Specification

- DSP0240: Platform Level Data Model (PLDM) Base Specification

- DSP0248: Platform Level Data Model (PLDM) for Platform Monitoring and Control Specification

- DSP0267: Platform Level Data Model (PLDM) for Firmware Update Specification

Trusted Computing Group (TCG)

- DICE Layering Architecture: Device Identity Composition Engine

- DICE Attestation Architecture: Certificate-based attestation

- DICE Protection Environment (DPE): Runtime attestation service

- TCG DICE Concise Evidence Binding for SPDM: Evidence format specification

National Institute of Standards and Technology (NIST)

- NIST SP 800-193: Platform Firmware Resiliency Guidelines

- NIST FIPS 186-5: Digital Signature Standard (DSS)

- NIST SP 800-90A: Recommendation for Random Number Generation

- NIST SP 800-108: Recommendation for Key Derivation Functions

High Level Architecture

The OpenPRoT architecture is designed to be a flexible and extensible platform Root of Trust (PRoT) solution. It is built upon a layered approach that abstracts hardware-specific implementations, providing standardized interfaces for higher-level applications. This architecture promotes reusability, interoperability, and a consistent security posture across different platforms.

Block Diagram

The following block diagram illustrates the high-level architecture of OpenPRoT.

Architectural Layers

The OpenPRoT architecture can be broken down into the following layers:

- Hardware Abstraction Layer (HAL): At the lowest level, the Driver Development Kit (DDK) provides hardware abstractions. This layer is responsible for interfacing with the specific RoT silicon and platform hardware.

- Operating System: Above the DDK sits the operating system, which provides the foundational services for the upper layers.

- Middleware: This layer consists of standardized communication protocols that enable secure and reliable communication between different components of the system. Key protocols include:

- MCTP (Management Component Transport Protocol): Provides a transport layer that is compatible with various hardware interfaces.

- SPDM (Security Protocol and Data Model): Used for establishing secure channels and for attestation.

- PLDM (Platform Level Data Model): Provides interfaces for firmware updates and telemetry retrieval.

- Services: This layer provides a minimal set of standardized services that align with the OpenPRoT specification. These services include:

- Lifecycle Services: Manages the lifecycle state of the device, including secure debug enablement.

- Attestation: Aggregates attestation reports from platform components.

- Firmware Update & Recovery: Orchestrates the secure update and recovery of firmware for platform components.

- Telemetry: Collects and extracts telemetry data.

- Applications: At the highest level are the applications that implement the core logic of the PRoT. These applications have room for differentiation while being built upon standardized interfaces. Key applications include:

- Secure Boot: Orchestrates the secure boot process for platform components.

- Policy Manager: Manages the security policies of the platform.
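The layering described above can be sketched in Rust as a service that is generic over a HAL trait, so the same service code builds against real silicon or a host-side mock. This is only an illustration under stated assumptions: `DigestHal`, `MockHal`, and `MeasurementService` are hypothetical names and not OpenPRoT APIs, and the mock digest is a non-cryptographic FNV-1a hash used purely so the example is self-contained.

```rust
/// Hypothetical HAL trait: the only hardware-facing interface the service sees.
pub trait DigestHal {
    /// Returns a digest of `data`. A real HAL would drive a hardware hash engine.
    fn digest(&mut self, data: &[u8]) -> [u8; 4];
}

/// Host-side mock for tests. NOT cryptographic: 32-bit FNV-1a, for illustration only.
pub struct MockHal;

impl DigestHal for MockHal {
    fn digest(&mut self, data: &[u8]) -> [u8; 4] {
        let mut hash: u32 = 0x811c_9dc5; // FNV-1a offset basis
        for byte in data {
            hash ^= *byte as u32;
            hash = hash.wrapping_mul(0x0100_0193); // FNV-1a prime
        }
        hash.to_be_bytes()
    }
}

/// Hypothetical service-layer component written only against the HAL trait.
pub struct MeasurementService<H: DigestHal> {
    hal: H,
    log: Vec<[u8; 4]>,
}

impl<H: DigestHal> MeasurementService<H> {
    pub fn new(hal: H) -> Self {
        Self { hal, log: Vec::new() }
    }

    /// Measures an image through the HAL and records the result.
    pub fn measure(&mut self, image: &[u8]) -> [u8; 4] {
        let digest = self.hal.digest(image);
        self.log.push(digest);
        digest
    }

    /// Returns all measurements recorded so far, in order.
    pub fn measurements(&self) -> &[[u8; 4]] {
        &self.log
    }
}
```

Constructing `MeasurementService::new(MockHal)` on a host and swapping in a silicon-backed implementation of the trait on target is the kind of reuse the layered design aims for.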

Use Cases

Use cases are divided into two general groups:

- Legacy Admissible Architectures: Where the upstream device, that is, the device attached to the Root of Trust (RoT), boots from SPI NOR Flash EEPROM.

- Modern Admissible Architectures: Where the upstream device supports flash-less boot (i.e., stream boot). Support for modern architectures will be added in a future version of this specification.

Legacy Admissible Architectures

There are three legacy admissible architectures supported by the OpenPRoT implementation:

| Architecture | Common Usages | SPI NOR Flash EEPROM |

|---|---|---|

| Dual-Flash Side-by-side | (Preferred) Most integrations | Two parts, one partition per part (i.e., A + B). |

| Direct-Connect | Add-in devices; board-constrained | One part, double-sized to support A/B updates. |

| TPM | TPM Daughtercard, Onboard TPM | None |

Direct-Connect

In the Direct-Connect architecture, OpenPRoT acts as an SPI interposer between the upstream device and the SPI NOR Flash EEPROM. OpenPRoT examines the opcode of each transaction and decides whether the operation should pass through to the backing SPI Flash or be intercepted and handled by its hardware filtering logic. This policy is controlled by both hardware and firmware. Typically:

- Control operations are handled by the RoT hardware directly.

- Read operations are passed through to the backing SPI Flash.

- Write operations are intercepted by OpenPRoT firmware and may be replayed to the backing SPI flash based on security policy.
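The typical policy above can be sketched as an opcode classifier. The opcode values are the widely used SPI NOR command bytes; which opcodes count as "control" operations and the default-deny handling of unknown opcodes are assumptions made for this illustration, not requirements of the specification. `FilterAction` and `classify_opcode` are hypothetical names.

```rust
/// What the interposer does with a transaction (hypothetical type).
#[derive(Debug, PartialEq, Eq, Clone, Copy)]
pub enum FilterAction {
    /// Control operation answered directly by the RoT hardware.
    HandleInHardware,
    /// Read forwarded unchanged to the backing SPI flash.
    PassThrough,
    /// Write or erase held for a firmware policy decision before any replay.
    InterceptForPolicy,
    /// Unknown opcode: rejected here as an assumed default-deny choice.
    Reject,
}

/// Classifies a transaction by its first byte (the SPI opcode).
pub fn classify_opcode(opcode: u8) -> FilterAction {
    match opcode {
        0x05 // Read Status Register
        | 0x06 // Write Enable
        | 0x04 // Write Disable
        | 0x9F // Read JEDEC ID
        => FilterAction::HandleInHardware,
        0x03 // Read
        | 0x0B // Fast Read
        => FilterAction::PassThrough,
        0x02 // Page Program
        | 0x20 // Sector Erase (4 KiB)
        | 0xD8 // Block Erase (64 KiB)
        | 0xC7 // Chip Erase
        => FilterAction::InterceptForPolicy,
        _ => FilterAction::Reject,
    }
}
```

In a real design this decision is split between hardware filter tables and firmware, so the table above would be configuration rather than a hard-coded match.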

OpenPRoT supports atomic A/B updates by diverting read operations to either the lower or upper half of the SPI Flash while hiding the opposing half. This requires the SPI Flash to be double the size needed by the upstream device (e.g., a 512Mbit Flash for a 256Mbit requirement).
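A minimal sketch of the address diversion, assuming partition A occupies the lower half and partition B the upper half (the text does not fix which is which). For the example sizes, a 512 Mbit part is 64 MiB (0x0400_0000 bytes), so the upstream device sees a 32 MiB (0x0200_0000 byte) window. `AbWindow` and `Partition` are hypothetical names.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Partition {
    A, // assumed to be the lower half of the flash
    B, // assumed to be the upper half of the flash
}

/// Maps upstream read addresses onto the active half of a double-sized flash.
pub struct AbWindow {
    flash_bytes: u32,
    active: Partition,
}

impl AbWindow {
    pub fn new(flash_bytes: u32, active: Partition) -> Self {
        AbWindow { flash_bytes, active }
    }

    /// Size of the window the upstream device can see (half the physical part).
    pub fn visible_bytes(&self) -> u32 {
        self.flash_bytes / 2
    }

    /// Translates an upstream address to a physical flash address.
    /// Returns `None` for addresses outside the visible window, which keeps
    /// the opposing half hidden.
    pub fn translate(&self, upstream_addr: u32) -> Option<u32> {
        let half = self.visible_bytes();
        if upstream_addr >= half {
            return None;
        }
        Some(match self.active {
            Partition::A => upstream_addr,
            Partition::B => half + upstream_addr,
        })
    }

    /// Switches the active partition. The update is atomic from the upstream
    /// device's point of view because only this selector changes.
    pub fn swap(&mut self) {
        self.active = match self.active {
            Partition::A => Partition::B,
            Partition::B => Partition::A,
        };
    }
}
```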

Note: Direct-Connect has a hardware limitation on the maximum supported SPI frequency due to board capacitance, layout, and the PRoT silicon. The integration designer must verify that the expected SPI frequency range is compatible with the upstream device. If this architecture is infeasible, consider the Dual-Flash Side-by-Side architecture instead.

Dual-Flash Side-by-Side

In the Dual-Flash Side-by-Side architecture, the upstream device has direct access to one of two physical SPI NOR Flash EEPROM chips, selected by an OpenPRoT-controlled mux. This arrangement supports atomic A/B updates. OpenPRoT can also directly access each flash chip. The layout should use the smallest possible nets to avoid signal integrity issues from stubs.

Both SPI Flash chips must be the same part. The OpenPRoT firmware coordinates the mux control and its two SPI host interfaces to ensure the nets are not double-driven.
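One way to picture the coordination requirement is a small guard that refuses any PRoT access that would drive the same net as the upstream device. The sketch assumes the simplest policy, in which OpenPRoT only drives the chip that is not currently routed upstream; a real integration might instead hold the upstream device in reset while accessing its chip. `FlashMux`, `Chip`, and `MuxError` are hypothetical names.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Chip {
    A,
    B,
}

#[derive(Debug, PartialEq, Eq)]
pub enum MuxError {
    /// The requested chip is currently routed to the upstream device.
    WouldDoubleDrive,
}

/// Tracks which chip the mux routes to the upstream device.
pub struct FlashMux {
    upstream: Chip,
}

impl FlashMux {
    pub fn new(upstream: Chip) -> Self {
        FlashMux { upstream }
    }

    /// Chip currently visible to the upstream device.
    pub fn upstream(&self) -> Chip {
        self.upstream
    }

    /// Grants PRoT access only to the chip the upstream device is not using.
    pub fn prot_access(&self, chip: Chip) -> Result<Chip, MuxError> {
        if chip == self.upstream {
            Err(MuxError::WouldDoubleDrive)
        } else {
            Ok(chip)
        }
    }

    /// Flips the mux, completing an A/B switch in one step.
    pub fn swap(&mut self) {
        self.upstream = match self.upstream {
            Chip::A => Chip::B,
            Chip::B => Chip::A,
        };
    }
}
```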

Note: If using quad-SPI, the flash requires a password protection feature, as the Write Protect (WP) pin is not available.

TPM

The TPM Admissible Architecture is designed for a direct connection to a standard TPM-SPI interface. In this configuration, OpenPRoT can be soldered onto the main board or placed on a separate daughtercard.

TPM integrations do not require a backing SPI NOR Flash EEPROM and generally only support single-mode SPI. Where possible, these integrations should adhere to a standard TPM connector design.

Threat Model

Assets

- Integrity and authenticity of OpenPRoT firmware

- Integrity and authorization of cryptographic operations

- Integrity of anti-rollback counters

- Integrity and confidentiality of symmetric keys managed by OpenPRoT

- Integrity and confidentiality of private asymmetric keys

- Integrity of boot measurements

- Integrity and authenticity of firmware update payloads

- Integrity and authenticity of OpenPRoT policies

Attacker Profile

The attacker profile is based on the JIL Application of Attack Potential to Smartcards and Similar Devices specification, version 3.2.1.

- Type of access: physical, remote

- Attacker Proficiency Levels: expert, proficient, layman

- Knowledge of the TOE: public (open source), critical for signing keys

- Equipment: none, standard, specialized, bespoke

Attacks within Scope

See the JIL specification for examples of attacks.

- Physical attacks

- Perturbation attacks

- Side-channel attacks

- Exploitation of test features

- Attacks on RNG

- Software attacks

- Application isolation

Threat Modeling

To provide a transparent view of the security posture for a given OpenPRoT + hardware implementation, integrators are required to perform a threat modeling analysis. This analysis must evaluate the specific implementation against the assets and attacker profile defined in this document.

The results of this analysis must be documented in table format, with the following columns:

- Threat ID: Unique identifier which can be referenced in documentation and security audits

- Threat Description: Definition of the attack profile and potential attack.

- Target Assets: List of impacted assets

- Mitigation(s): List of countermeasures implemented in hardware and/or software to mitigate the potential attack

- Verification: Results of verification plan used to gain confidence in the mitigation strategy.

Integrators should use the JIL specification as a guideline to identify relevant attacks and must detail the specific mitigation strategies implemented in their design. The table must be populated for the target hardware implementation to allow for a comprehensive security review.
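The required table columns map naturally onto a record type. The sketch below is one possible way to keep threat entries machine-checkable; the type and function names are hypothetical, and the example entry is purely illustrative rather than a real analysis result.

```rust
/// One row of the threat modeling table, with one field per required column.
pub struct ThreatEntry {
    pub id: &'static str,
    pub description: &'static str,
    pub target_assets: &'static [&'static str],
    pub mitigations: &'static [&'static str],
    pub verification: &'static str,
}

/// Returns true when every required column has been filled in.
pub fn is_complete(entry: &ThreatEntry) -> bool {
    !entry.id.is_empty()
        && !entry.description.is_empty()
        && !entry.target_assets.is_empty()
        && !entry.mitigations.is_empty()
        && !entry.verification.is_empty()
}

/// Renders the entry as a pipe-delimited table row.
pub fn to_table_row(entry: &ThreatEntry) -> String {
    format!(
        "| {} | {} | {} | {} | {} |",
        entry.id,
        entry.description,
        entry.target_assets.join(", "),
        entry.mitigations.join("; "),
        entry.verification
    )
}

/// Hypothetical entry, for illustration only. It is not taken from any
/// real OpenPRoT threat analysis.
pub fn example_entry() -> ThreatEntry {
    ThreatEntry {
        id: "T-EXAMPLE-01",
        description: "Remote attacker replays an older signed firmware image",
        target_assets: &["Integrity of anti-rollback counters"],
        mitigations: &["Monotonic version check before activation"],
        verification: "Illustrative placeholder, not a real test result",
    }
}
```

A check such as `is_complete` could run in CI so that a security review never receives a table with empty mitigation or verification columns.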

Firmware Resiliency

Firmware resiliency is a critical concept in modern cybersecurity, particularly as outlined in the NIST SP 800-193 specification. As computing devices become more integral to both personal and organizational operations, the security of their underlying firmware has become paramount. Firmware is often a target for sophisticated cyberattacks because it operates below the operating system, making it a potential vector for persistent threats that can evade traditional security measures. NIST SP 800-193 addresses these concerns by providing a comprehensive framework for enhancing the security and resiliency of platform firmware, ensuring that systems can withstand, detect, and recover from attacks.

The NIST SP 800-193 guidelines focus on three main pillars: protection, detection, and recovery. Protection involves implementing measures to prevent unauthorized modifications to firmware, such as using cryptographic techniques to authenticate updates. Detection is about ensuring that any unauthorized changes to the firmware are quickly identified, which can be achieved through integrity checks and monitoring mechanisms. Recovery is the ability to restore firmware to a known good state after an attack or corruption, ensuring that the system can continue to operate securely. By addressing these areas, the guidelines aim to create a robust defense against firmware-level threats, which are increasingly being exploited by attackers seeking to gain deep access to systems.

In the context of NIST SP 800-193, firmware resiliency is not just about preventing attacks but also about ensuring continuity and trust in the system. The specification recognizes that while it is impossible to eliminate all risks, having a resilient firmware infrastructure can significantly mitigate the impact of potential breaches. This approach is particularly important for critical infrastructure and enterprise environments, where the integrity and availability of systems are crucial. By adopting the principles of NIST SP 800-193, we can enhance our security posture, protect sensitive data, and maintain operational stability in the face of evolving cyber threats.

PRoT Resiliency

TBD

Connected Device Resiliency

TBD

Middleware

OpenPRoT middleware consists of support libraries necessary to implement Root of Trust functionality, telemetry, and firmware management. Support for DMTF protocols such as MCTP, SPDM, and PLDM is provided.

MCTP

OpenPRoT devices shall support MCTP as the transport for all DMTF protocols.

Versions

The minimum required MCTP version is 1.3.1 (DSP0236). Support for MCTP 2.0.0 (DSP0256) may be introduced in a future version of this specification.

Required Bindings

Currently only one binding is mandatory in the OpenPRoT specification, though this will change in future versions.

- MCTP over SMBus (DSP0237, 1.2.0)

Recommended Bindings

- MCTP over I3C (DSP0233, 1.0.1)

- MCTP over PCIe-VDM (DSP0238, 1.2.1), only on platforms utilizing PCIe 6 and up.

- MCTP over USB (DSP0283, 1.0.0)

Required Commands

- Set Endpoint ID

- Get Endpoint ID

- Get MCTP Version Support

- Get Message Type Support

- Get Vendor Defined Message Support

- All commands in the range 0xF0 - 0xFF
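The required command set can be expressed as a lookup on the MCTP control command code. The numeric codes below reflect my reading of the DSP0236 control command table and should be checked against the specification before use; `Requirement` and `mctp_control_requirement` are hypothetical names.

```rust
#[derive(Debug, PartialEq, Eq, Clone, Copy)]
pub enum Requirement {
    Required,
    /// Optional today, but may become required in a future revision.
    Optional,
}

/// Classifies an MCTP control command code per the lists above.
/// Command codes are assumed from DSP0236; verify before relying on them.
pub fn mctp_control_requirement(command_code: u8) -> Requirement {
    match command_code {
        0x01 // Set Endpoint ID
        | 0x02 // Get Endpoint ID
        | 0x04 // Get MCTP Version Support
        | 0x05 // Get Message Type Support
        | 0x06 // Get Vendor Defined Message Support
        | 0xF0..=0xFF // range listed as required above
        => Requirement::Required,
        _ => Requirement::Optional,
    }
}
```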

Optional Commands

- All other commands are optional, but may become required in future revisions.

Development TCP Binding

- OpenPRoT will provide a TCP binding for developmental purposes.

SPDM

OpenPRoT devices shall use SPDM to conduct all attestation operations, both with downstream devices (as a requester) and upstream devices (as a responder). Devices may choose to act as a requester, a responder, or both. All SPDM version references assume alignment with the most recently released versions of the spec.

OCP Attestation Spec 1.1 Alignment

OpenPRoT implementations of SPDM must align with the OCP Attestation Spec 1.1. All following sections have taken this spec into account. Please refer to that specification for details on specific requirements.

Baseline Version

OpenPRoT sets a baseline version of SPDM 1.2.

Requesters

OpenPRoT devices implementing an SPDM requester will implement support for SPDM 1.2 minimum and may implement SPDM 1.3 and up. The minimum and maximum supported SPDM versions can be changed if support for other versions is not necessary.

Responders

OpenPRoT devices implementing an SPDM responder must implement support for SPDM 1.2 or higher. Responders may only report (via GET_VERSION) a single supported version of SPDM.
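The version rules above suggest a simple selection step on the requester side: pick the highest version both sides support, but never go below the 1.2 baseline. The sketch below is an assumption about how an integrator might implement that step, not the OpenPRoT implementation; `SpdmVersion` and `select_version` are hypothetical names.

```rust
/// SPDM major.minor version. Derived ordering compares major first, then minor.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
pub struct SpdmVersion {
    pub major: u8,
    pub minor: u8,
}

/// OpenPRoT baseline: SPDM 1.2.
pub const BASELINE: SpdmVersion = SpdmVersion { major: 1, minor: 2 };

/// Picks the highest version at or above the baseline that both the
/// requester supports and the responder reported via GET_VERSION.
pub fn select_version(
    requester: &[SpdmVersion],
    responder: &[SpdmVersion],
) -> Option<SpdmVersion> {
    requester
        .iter()
        .copied()
        .filter(|v| *v >= BASELINE && responder.contains(v))
        .max()
}
```

Because a responder reports only one version, the responder slice normally has a single element, and the result is either that version or `None`.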

Required Commands

All requesters and responders shall implement the four (4) spec mandatory SPDM commands:

- GET_VERSION

- GET_CAPABILITIES

- NEGOTIATE_ALGORITHMS

- RESPOND_IF_READY

All requesters and responders shall implement the following spec optional commands:

- GET_DIGESTS

- GET_CERTIFICATE

- CHALLENGE

- GET_MEASUREMENTS

- GET_CSR

- SET_CERTIFICATE

- CHUNK_SEND

- CHUNK_GET

Requesters and responders may implement the following recommended spec optional commands:

- Events: GET_SUPPORTED_EVENT_TYPES, SUBSCRIBE_EVENT_TYPES, SEND_EVENT

- Encapsulated requests: GET_ENCAPSULATED_REQUEST, DELIVER_ENCAPSULATED_RESPONSE

- GET_KEY_PAIR_INFO

- SET_KEY_PAIR_INFO

- KEY_UPDATE

- KEY_EXCHANGE

- FINISH

- PSK_EXCHANGE

- PSK_FINISH

All other spec optional commands may be implemented as the integrator sees fit for their use case.
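The three tiers of SPDM commands above lend themselves to a conformance check. The following sketch classifies a command name and reports which required commands an implementation is missing; the command names come from the lists above, while `Level`, `requirement_level`, and `missing_required` are hypothetical helpers.

```rust
#[derive(Debug, PartialEq, Eq, Clone, Copy)]
pub enum Level {
    SpecMandatory,
    OpenProtRequired,
    Recommended,
    IntegratorChoice,
}

pub static SPEC_MANDATORY: [&str; 4] = [
    "GET_VERSION", "GET_CAPABILITIES", "NEGOTIATE_ALGORITHMS", "RESPOND_IF_READY",
];

pub static OPENPROT_REQUIRED: [&str; 8] = [
    "GET_DIGESTS", "GET_CERTIFICATE", "CHALLENGE", "GET_MEASUREMENTS",
    "GET_CSR", "SET_CERTIFICATE", "CHUNK_SEND", "CHUNK_GET",
];

pub static RECOMMENDED: [&str; 12] = [
    "GET_SUPPORTED_EVENT_TYPES", "SUBSCRIBE_EVENT_TYPES", "SEND_EVENT",
    "GET_ENCAPSULATED_REQUEST", "DELIVER_ENCAPSULATED_RESPONSE",
    "GET_KEY_PAIR_INFO", "SET_KEY_PAIR_INFO", "KEY_UPDATE",
    "KEY_EXCHANGE", "FINISH", "PSK_EXCHANGE", "PSK_FINISH",
];

/// Returns the requirement tier for an SPDM command name.
pub fn requirement_level(command: &str) -> Level {
    if SPEC_MANDATORY.iter().any(|c| *c == command) {
        Level::SpecMandatory
    } else if OPENPROT_REQUIRED.iter().any(|c| *c == command) {
        Level::OpenProtRequired
    } else if RECOMMENDED.iter().any(|c| *c == command) {
        Level::Recommended
    } else {
        Level::IntegratorChoice
    }
}

/// Lists every mandatory or OpenPRoT-required command absent from `implemented`.
pub fn missing_required(implemented: &[&str]) -> Vec<&'static str> {
    SPEC_MANDATORY
        .iter()
        .chain(OPENPROT_REQUIRED.iter())
        .copied()
        .filter(|req| !implemented.iter().any(|have| have == req))
        .collect()
}
```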

Required Capabilities

- CERT_CAP (required for GET_CERTIFICATE)

- CHAL_CAP (required for CHALLENGE)

- MEAS_CAP (required for GET_MEASUREMENTS)

- MEAS_FRESH_CAP

Algorithms

The following cryptographic algorithms are accepted for use within OpenPRoT, but may be further constrained by hardware capabilities. At a minimum OpenPRoT hardware must support:

- TPM_ALG_ECDSA_ECC_NIST_P384

- TPM_ALG_SHA3_384

All others are optional and may be used if supported.

- Asymmetric: TPM_ALG_ECDSA_ECC_NIST_P256, TPM_ALG_ECDSA_ECC_NIST_P384, EdDSA ed25519, EdDSA ed448, TPM_ALG_SHA_384

- Hash: TPM_ALG_SHA_256, TPM_ALG_SHA_384, TPM_ALG_SHA_512, TPM_ALG_SHA3_256, TPM_ALG_SHA3_384, TPM_ALG_SHA3_512

- AEAD Cipher: AES-128-GCM, AES-256-GCM, CHACHA20_POLY1305
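As a rough illustration of how the minimum set and the optional algorithms might be used together, the sketch below checks a hardware capability list against the stated minimum and picks the first locally preferred algorithm that a peer also supports. The preference-order selection is an assumption for illustration; the actual selection rules are defined by SPDM NEGOTIATE_ALGORITHMS. `missing_minimum` and `negotiate` are hypothetical helpers.

```rust
/// Minimum algorithms OpenPRoT hardware must support, per the text above.
pub static MINIMUM_ALGORITHMS: [&str; 2] =
    ["TPM_ALG_ECDSA_ECC_NIST_P384", "TPM_ALG_SHA3_384"];

/// Returns the minimum algorithms that `hardware` does not provide.
pub fn missing_minimum(hardware: &[&str]) -> Vec<&'static str> {
    MINIMUM_ALGORITHMS
        .iter()
        .copied()
        .filter(|min| !hardware.iter().any(|have| have == min))
        .collect()
}

/// Picks the first algorithm in local preference order that the peer supports.
pub fn negotiate<'a>(preference: &[&'a str], peer: &[&str]) -> Option<&'a str> {
    preference
        .iter()
        .copied()
        .find(|alg| peer.iter().any(|p| p == alg))
}
```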

Attestation Report Format

Devices will support either RATS EAT (as CWT) or an SPDM evidence manifest TOC per the TCG DICE Concise Evidence for SPDM specification.

Measurement block 0xF0

Devices that do not provide a Measurement Manifest shall locate the RATS EAT at SPDM measurement block 0xF0.

PLDM

OpenPRoT devices support the Platform Level Data Model (PLDM) as a responder for firmware updates and platform monitoring. This entails responding to messages of Type 0 (Base), Type 2 (Platform Monitoring and Control), and Type 5 (Firmware Update).
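A responder that handles only these three message types might dispatch on the PLDM type field as sketched below. The type numbers are the ones given above; `PldmType`, `from_raw`, and `handler_name` are hypothetical names, and the handler labels stand in for real handler functions.

```rust
/// PLDM message types an OpenPRoT responder answers, per the text above.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum PldmType {
    Base = 0,
    PlatformMonitoringAndControl = 2,
    FirmwareUpdate = 5,
}

impl PldmType {
    /// Parses the raw PLDM type value; unsupported types are returned as `Err`.
    pub fn from_raw(raw: u8) -> Result<PldmType, u8> {
        match raw {
            0 => Ok(PldmType::Base),
            2 => Ok(PldmType::PlatformMonitoringAndControl),
            5 => Ok(PldmType::FirmwareUpdate),
            other => Err(other),
        }
    }
}

/// Chooses a handler for an incoming message by PLDM type.
pub fn handler_name(raw_type: u8) -> &'static str {
    match PldmType::from_raw(raw_type) {
        Ok(PldmType::Base) => "base",
        Ok(PldmType::PlatformMonitoringAndControl) => "platform-monitoring",
        Ok(PldmType::FirmwareUpdate) => "firmware-update",
        Err(_) => "unsupported",
    }
}
```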

PLDM Base Specifications

Type 0 - Base Specification

- Purpose: Base Specification and Initialization

- Version: 1.2.0

- Specification: PLDM Base Specification

All responders must implement the following mandatory PLDM commands:

- GetTID

- GetPLDMVersion

- GetPLDMTypes

- GetPLDMCommands

All responders must also implement the following optional command:

SetTID

Type 2 - Platform Monitoring and Control

- Purpose: Platform Monitoring and Control

- Version: 1.3.0

- Specification: PLDM for Platform Monitoring and Control

OpenPRoT supports PLDM Monitoring and Control by providing a Platform Descriptor Record (PDR) repository to a prospective PLDM manageability access point discovery agent. These PDRs are defined in JSON files and included in OpenPRoT at build time, with no support for dynamic adjustments. The PDRs are limited to security features and will only support PLDM sensors, not effectors.

PLDM Monitoring PDRs

- Terminus Locator PDR

- Numeric Sensor PDR

Type 5 - Firmware Update

- Purpose: Firmware Update

- Version: 1.3.0

- Specification: PLDM for Firmware Update

Required Inventory Commands

- QueryDeviceIdentifiers

- GetFirmwareParameters

Required Update Commands

- RequestFirmwareUpdate

- PassComponentTable

- UpdateComponent

- TransferComplete

- VerifyComplete

- ApplyComplete

- ActivateFirmware

- GetStatus

All responders must also implement the following optional commands:

- GetPackageData

- GetPackageMetaData
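The required update commands imply an ordering that can be modeled as a small state machine. The sketch below is a simplified single-component flow: the state names follow my reading of the firmware device state machine in DSP0267, and data transfer requests, cancellation, and timeouts are deliberately omitted. `State`, `Event`, and `next_state` are hypothetical names.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum State {
    Idle,
    LearnComponents,
    ReadyXfer,
    Download,
    Verify,
    Apply,
    Activate,
}

/// Events named after the required update commands listed above.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Event {
    RequestFirmwareUpdate,
    PassComponentTable,
    UpdateComponent,
    TransferComplete,
    VerifyComplete,
    ApplyComplete,
    ActivateFirmware,
    GetStatus,
}

/// Returns the next state, or the rejected (state, event) pair when the
/// command arrives out of order.
pub fn next_state(state: State, event: Event) -> Result<State, (State, Event)> {
    match (state, event) {
        // GetStatus is a query and never changes state.
        (s, Event::GetStatus) => Ok(s),
        (State::Idle, Event::RequestFirmwareUpdate) => Ok(State::LearnComponents),
        (State::LearnComponents, Event::PassComponentTable) => Ok(State::ReadyXfer),
        (State::ReadyXfer, Event::UpdateComponent) => Ok(State::Download),
        (State::Download, Event::TransferComplete) => Ok(State::Verify),
        (State::Verify, Event::VerifyComplete) => Ok(State::Apply),
        // After applying one component the device is ready for the next.
        (State::Apply, Event::ApplyComplete) => Ok(State::ReadyXfer),
        (State::ReadyXfer, Event::ActivateFirmware) => Ok(State::Activate),
        (s, e) => Err((s, e)),
    }
}
```

Rejecting out-of-order commands in one place keeps policy checks, such as refusing activation before verification, easy to audit.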

Services

The OpenPRoT services layer provides a standardized set of security and management functions that are essential for a Platform Root of Trust (PRoT). Built on top of the middleware and Hardware Abstraction Layer (HAL), this layer offers high-level capabilities that applications can leverage to ensure system integrity, security, and reliability.

The following services are defined in the OpenPRoT specification:

- Firmware Update

- Attestation

- Firmware Recovery (TBD)

- Secure Boot (TBD)

- Policy Management (TBD)

Attestation

1. Introduction

1.1 OpenPRoT Attestation Components

The OpenPRoT firmware stack provides the following attestation capabilities:

SPDM Responder: Enables external relying parties to establish trust in OpenPRoT by:

- Responding to attestation requests over SPDM protocol

- Providing cryptographically signed evidence about platform state

- Supporting both initial trust establishment and periodic re-attestation

- Enabling secure session establishment with authenticated endpoints

SPDM Requester: Enables OpenPRoT to establish trust in other platform components by:

- Requesting attestation evidence from downstream devices

- Verifying device identities and configurations

- Establishing secure sessions with attested devices

- Supporting platform composition attestation

Local Verifier: Enables on-platform verification of attestation evidence by:

- Appraising evidence from platform components without external connectivity

- Supporting air-gapped and latency-sensitive deployments

- Enforcing platform-specific security policies

- Making local trust decisions for platform operations

Note: While OpenPRoT includes a local verifier component, verification can also be performed remotely by external verifiers. The choice between local and remote verification depends on deployment requirements, connectivity constraints, and security policies.

2. Scope and Purpose

2.1 Scope

This specification covers the attestation capabilities provided by the OpenPRoT firmware stack:

In Scope:

- SPDM Responder Implementation: How OpenPRoT responds to external attestation requests

- SPDM Requester Implementation: How OpenPRoT requests attestation from platform devices

- Local Verifier Architecture: On-platform evidence appraisal capabilities

- Evidence Generation: How OpenPRoT firmware collects and reports platform measurements

- Evidence Formats: Standardized structures for conveying attestation claims (OCP RATS EAT, Concise Evidence)

- Protocol Bindings: SPDM protocol integration and message flows

- Device Identity Provisioning: Owner identity provisioning workflows

- Reference Value Integration: How OpenPRoT uses CoRIM for verification

- Plugin Architecture: Extensibility for non-OCP evidence formats

2.2 Out of Scope

This specification does not cover:

Hardware-Specific Details:

- PRoT Hardware Implementations: Specific hardware designs, architectures, and capabilities

- Manufacturing Provisioning: Secret provisioning into hardware (vendor-specific)

- Hardware Root of Trust Mechanisms: Boot ROM implementation, key derivation, measurement collection at hardware level

- Attester Composition: Layered measurement and key derivation (hardware-dependent)

- HAL Trait Implementations: Specific implementations of HAL traits for particular hardware platforms (integrator responsibility)

Note on Hardware Variance: OpenPRoT is a software stack that operates on top of PRoT hardware. The security strength and attestation capabilities of an OpenPRoT-based system depend significantly on the underlying hardware implementation. Hardware vendors must document their specific:

- Root of trust initialization and measurement mechanisms

- Key derivation and protection approaches

- Certificate chain structures

- Cryptographic capabilities and algorithms

- Isolation and protection boundaries

Other Out of Scope Items:

- OpenPRoT Firmware Implementation Details: Internal firmware architecture (covered in OpenPRoT project documentation)

- Application-level Attestation Policies: Use-case specific verification policies

- Cryptographic Algorithm Specifications: Defers to NIST and industry standards

- Remote Verifier Implementation: External verifier systems (though evidence format is specified)

- Reference Value Provider Services: CoRIM generation and distribution infrastructure

- Transport Layer Details: Physical/link layer protocols (I2C, I3C, MCTP, etc.)

3. Normative References

The following standards are normatively referenced in this specification:

3.1 IETF Specifications

- RFC 9334: Remote ATtestation procedureS (RATS) Architecture

- RFC 9711: Entity Attestation Token (EAT)

- CoRIM: Concise Reference Integrity Manifest (IETF Draft)

- RFC 8949: Concise Binary Object Representation (CBOR)

- RFC 9052: CBOR Object Signing and Encryption (COSE)

- RFC 5280: Internet X.509 Public Key Infrastructure Certificate and CRL Profile

3.2 TCG Specifications

- DICE Layering Architecture: Device Identity Composition Engine

- DICE Attestation Architecture: Certificate-based attestation

- DICE Protection Environment (DPE): Runtime attestation service

- TCG DICE Concise Evidence Binding for SPDM: Evidence format specification

3.3 DMTF Specifications

- DSP0274: Security Protocol and Data Model (SPDM) Version 1.3 or later

- DSP0277: Secured Messages using SPDM over MCTP Binding

- DSP0236: Management Component Transport Protocol (MCTP) Base Specification

3.4 Other Standards

- NIST FIPS 186-5: Digital Signature Standard (DSS)

- NIST SP 800-90A: Recommendation for Random Number Generation

- NIST SP 800-108: Recommendation for Key Derivation Functions

4. Terminology and Definitions

4.1 Attestation Roles

Following IETF RATS RFC 9334, the OpenPRoT attestation architecture defines the following roles:

Attester: An entity (OpenPRoT firmware and associated platform components) that produces attestation evidence about its state and configuration.

Relying Party: An entity that depends on the validity of attestation evidence to make operational decisions. In OpenPRoT deployments, this is typically:

- External platform owner or management system (for initial trust establishment)

- Platform management controller (for periodic verification)

- Cloud service provider infrastructure (for fleet management)

Verifier: An entity that appraises attestation evidence against reference values and policies to produce attestation results. OpenPRoT supports:

- Local Verifier: Running within OpenPRoT firmware for on-platform verification

- Remote Verifier: External system performing verification (implementation not specified here)

Endorser: An entity that vouches for the authenticity and properties of attestation components. For OpenPRoT:

PRoT Hardware: The underlying hardware platform that provides the root of trust capabilities (secure boot, cryptographic acceleration, isolated execution, OTP storage).

SPDM Responder Role: OpenPRoT acting as an SPDM responder to provide attestation evidence to external requesters.

SPDM Requester Role: OpenPRoT acting as an SPDM requester to obtain attestation evidence from platform devices.

Local Verifier: The verification component within OpenPRoT that appraises evidence from platform devices without requiring external connectivity.

Platform Composition: The complete set of attested components including OpenPRoT and downstream devices.

4.3 Key Attestation Terms

Root of Trust (RoT): The foundational hardware and immutable firmware that serves as the trust anchor for the platform. In the OpenPRoT context, this is the PRoT hardware's boot ROM.

Compound Device Identifier (CDI): A cryptographic secret derived from measurements and used as the basis for key derivation in DICE.

Target Environment: A uniquely identifiable component or configuration that is measured and attested. In OpenPRoT:

- OpenPRoT firmware components (bootloader, runtime firmware)

- Hardware configurations (fuse settings, security configurations)

- Platform devices (when acting as SPDM requester)

TCB (Trusted Computing Base): The set of components that must be trusted for the security properties of a system to hold.

Evidence: Authenticated claims about platform state produced by the Attester. OpenPRoT generates evidence in multiple formats:

- DICE certificates with TCBInfo extensions

- TCG Concise Evidence

- RATS Entity Attestation Token (EAT)

Reference Values: Known-good measurements provided by the Reference Value Provider for comparison during verification. Typically distributed as CoRIM (Concise Reference Integrity Manifest).

Endorsement: Authenticated statements about device properties or certifications.

Appraisal Policy: Rules used by the Verifier to evaluate evidence against reference values.

Freshness: Property ensuring that evidence represents current platform state, typically achieved through nonces or timestamps.

4.4 DICE/DPE Terms

UDS (Unique Device Secret): A hardware-unique secret provisioned during manufacturing, stored in OTP/fuses, used as the root secret for DICE key derivation.

IDevID (Initial Device Identity): The manufacturer-provisioned device identity derived from UDS.

LDevID (Local Device Identity): An operator-provisioned device identity that can be used in place of IDevID.

Alias Key: A DICE-derived key that represents a specific layer in the boot chain.

DPE (DICE Protection Environment): A service that extends DICE principles to runtime, allowing dynamic context creation and key derivation.

DPE Context: A chain of measurements representing a specific execution path through the system.

DPE Handle: An identifier for a specific DPE context, used to extend measurements or derive keys.

4.5 SPDM Terms

SPDM Session: An authenticated and optionally encrypted communication channel between SPDM requester and responder.

Measurement Block: A collection of measurements representing a specific component or configuration.

Slot: An SPDM certificate chain storage location (Slot 0-7).

GET_MEASUREMENTS: SPDM command to retrieve attestation measurements.

GET_CERTIFICATE: SPDM command to retrieve certificate chains.

CHALLENGE: SPDM command to request signed evidence with freshness.

5. Attestation Architecture Overview

5.1 RATS Architecture Mapping

OpenPRoT implements the IETF RATS architecture with specific role assignments:

High-Level Flow:

- Relying Party (Platform Owner, CSP, Management System) needs to establish trust in the platform

- Relying Party requests attestation evidence from OpenPRoT via SPDM

- OpenPRoT (Attester) generates evidence containing measurements and claims

- Verifier (Remote or Local) receives evidence and appraises it

- Verifier retrieves reference values and endorsements from Reference Value Provider

- Verifier applies appraisal policy and generates attestation result

- Attestation result is conveyed to Relying Party

- Relying Party makes trust decision based on attestation result

Components:

- Attester: OpenPRoT Firmware Stack + PRoT Hardware

- Verifier: Remote verifier system OR OpenPRoT Local Verifier (for device attestation)

- Relying Party: External management system OR OpenPRoT (when verifying devices)

- Reference Value Provider: OpenPRoT project, hardware vendors, platform integrators

- Endorser: Hardware vendors, OpenPRoT project, platform integrators

5.2 Evidence Format Strategy

OpenPRoT adopts a standardized approach to evidence generation and verification:

5.2.1 OpenPRoT Evidence Generation (SPDM Responder)

When acting as an SPDM Responder, OpenPRoT produces attestation evidence in the following formats:

Primary Evidence Format: RATS EAT with OCP Profile

OpenPRoT generates Entity Attestation Tokens (EAT) following the OCP RATS EAT Attestation Profile. This format provides:

- Standardized container for attestation claims

- CBOR-encoded for efficiency

- COSE-signed for authenticity

- Nonce-based freshness

- TCG Concise Evidence embedded in measurements claim

EAT Structure:

The OpenPRoT EAT follows the OCP RATS EAT Attestation Profile specification. For complete details on the EAT structure, claims, and encoding, see:

https://opencomputeproject.github.io/Security/ietf-eat-profile/HEAD/

Supporting Evidence Formats:

- DICE Certificates with TCBInfo: Certificate chain establishing device identity and boot measurements

- TCG Concise Evidence: Standalone format containing reference-triples for measurements

- SPDM Measurement Blocks: Native SPDM measurement format for basic compatibility

5.2.2 OpenPRoT Evidence Verification (Local Verifier)

When acting as a Local Verifier, OpenPRoT supports multiple evidence formats:

Native Support: OCP RATS EAT Profile

The OpenPRoT Local Verifier natively supports appraisal of evidence in OCP RATS EAT Attestation Profile format. This enables:

- Standardized verification logic for OCP-compliant devices

- Consistent appraisal policy across vendors

- Interoperability with OCP ecosystem devices

- Direct comparison against CoRIM reference values

Verification Process for OCP EAT:

- Validate EAT signature using device certificate chain

- Verify nonce freshness

- Extract Concise Evidence from measurements claim

- Retrieve CoRIM reference values using corim-locator

- Compare evidence reference-triples against CoRIM reference-triples

- Apply appraisal policy

- Generate attestation result

Extended Support: Evidence Format Plugins

To accommodate diverse platform ecosystems, OpenPRoT includes an extensibility mechanism for non-OCP-compliant evidence formats:

Plugin Architecture:

- Evidence Parser Plugins: Parse vendor-specific evidence formats

- Claim Extractor Plugins: Extract measurements and claims from proprietary formats

- Policy Adapter Plugins: Map vendor-specific claims to OpenPRoT appraisal policies

Use Cases for Plugins:

- Legacy devices with proprietary attestation formats

- Vendor-specific evidence structures not yet migrated to OCP profile

- Specialized evidence formats for specific device classes

- Transitional support during ecosystem migration to OCP standards

Plugin Interface Requirements:

Plugins must implement the following interfaces:

- parse_evidence(): Convert vendor format to internal representation

- extract_claims(): Extract target environments and measurements

- validate_signature(): Verify evidence authenticity

- get_reference_values(): Retrieve or map to reference values

- apply_policy(): Execute appraisal logic
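
The five interfaces above could be expressed as a single Rust trait. The following is a minimal sketch under stated assumptions, not the OpenPRoT plugin API: the trait name `EvidencePlugin`, the types `ParsedEvidence`, `Claim`, `PluginError`, and `Verdict`, the driver `verify_with_plugin`, and the invented wire format handled by `ToyPlugin` are all hypothetical. Only the five method names come from the list above.

```rust
use std::collections::HashMap;

/// Internal representation produced by a parser (hypothetical shape).
#[derive(Debug, Clone, PartialEq)]
pub struct ParsedEvidence {
    pub payload: Vec<u8>,
    pub signature: Vec<u8>,
}

/// One measured target environment (hypothetical shape).
#[derive(Debug, Clone, PartialEq)]
pub struct Claim {
    pub environment: String,
    pub digest: Vec<u8>,
}

#[derive(Debug, PartialEq)]
pub enum PluginError {
    Malformed,
    BadSignature,
}

#[derive(Debug, PartialEq)]
pub enum Verdict {
    Accept,
    Reject,
}

/// Hypothetical trait mirroring the five plugin interfaces.
pub trait EvidencePlugin {
    fn parse_evidence(&self, raw: &[u8]) -> Result<ParsedEvidence, PluginError>;
    fn extract_claims(&self, evidence: &ParsedEvidence) -> Result<Vec<Claim>, PluginError>;
    fn validate_signature(&self, evidence: &ParsedEvidence) -> Result<(), PluginError>;
    fn get_reference_values(&self, claims: &[Claim]) -> Result<HashMap<String, Vec<u8>>, PluginError>;
    fn apply_policy(&self, claims: &[Claim], reference: &HashMap<String, Vec<u8>>) -> Verdict;
}

/// Drives any plugin through the interfaces in a fixed order, stopping at the first error.
pub fn verify_with_plugin(plugin: &dyn EvidencePlugin, raw: &[u8]) -> Result<Verdict, PluginError> {
    let evidence = plugin.parse_evidence(raw)?;
    plugin.validate_signature(&evidence)?;
    let claims = plugin.extract_claims(&evidence)?;
    let reference = plugin.get_reference_values(&claims)?;
    Ok(plugin.apply_policy(&claims, &reference))
}

/// Toy plugin for an invented format: byte 0 is a tag (0xA5 means "signed"),
/// the remaining bytes are a single digest for the environment "device-fw".
pub struct ToyPlugin {
    pub expected: Vec<u8>,
}

impl EvidencePlugin for ToyPlugin {
    fn parse_evidence(&self, raw: &[u8]) -> Result<ParsedEvidence, PluginError> {
        match raw.split_first() {
            Some((tag, rest)) if !rest.is_empty() => Ok(ParsedEvidence {
                payload: rest.to_vec(),
                signature: vec![*tag],
            }),
            _ => Err(PluginError::Malformed),
        }
    }

    fn extract_claims(&self, evidence: &ParsedEvidence) -> Result<Vec<Claim>, PluginError> {
        Ok(vec![Claim {
            environment: "device-fw".to_string(),
            digest: evidence.payload.clone(),
        }])
    }

    fn validate_signature(&self, evidence: &ParsedEvidence) -> Result<(), PluginError> {
        // Placeholder check only; a real plugin would verify a cryptographic signature.
        if evidence.signature == [0xA5u8] {
            Ok(())
        } else {
            Err(PluginError::BadSignature)
        }
    }

    fn get_reference_values(&self, claims: &[Claim]) -> Result<HashMap<String, Vec<u8>>, PluginError> {
        Ok(claims
            .iter()
            .map(|c| (c.environment.clone(), self.expected.clone()))
            .collect())
    }

    fn apply_policy(&self, claims: &[Claim], reference: &HashMap<String, Vec<u8>>) -> Verdict {
        let all_match = claims
            .iter()
            .all(|c| reference.get(&c.environment) == Some(&c.digest));
        if all_match { Verdict::Accept } else { Verdict::Reject }
    }
}
```

Keeping the call order in one driver function means a plugin cannot skip signature validation before its claims reach policy evaluation.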

Plugin Integration:

Platform integrators can add custom plugins to OpenPRoT's local verifier to support their specific device ecosystem while maintaining the core OCP-compliant verification path for standard devices.

5.3 OpenPRoT Dual Role Architecture

OpenPRoT operates in two distinct attestation roles depending on the interaction:

5.3.1 OpenPRoT as Attester (SPDM Responder)

When external relying parties need to establish trust in OpenPRoT:

Flow:

- External Relying Party (SPDM Requester) initiates SPDM session

- SPDM version negotiation and capability exchange

- Algorithm negotiation

- Certificate chain retrieval (GET_CERTIFICATE)

- Measurement request (GET_MEASUREMENTS) with nonce

- OpenPRoT (SPDM Responder) generates EAT with OCP profile

- OpenPRoT returns signed EAT containing Concise Evidence

- Verifier (remote) appraises evidence against reference values

- Attestation result returned to Relying Party

Evidence Provided by OpenPRoT:

- Certificate chain (structure determined by underlying PRoT hardware implementation)

- RATS EAT with OCP Profile containing:

- TCG Concise Evidence with reference-triples

- Freshness nonce

- CoRIM locator URI

- COSE signature using attestation key provided by underlying hardware

Note on Hardware Dependencies:

The certificate chain structure and attestation key derivation mechanisms are determined by the underlying PRoT hardware implementation and are outside the scope of OpenPRoT firmware. OpenPRoT leverages the attestation capabilities provided by the hardware platform. Hardware vendors should document their specific:

- Certificate chain structure and hierarchy

- Key derivation mechanisms

- Supported cryptographic algorithms

- Identity provisioning approach

Use Cases:

- Initial platform deployment and trust establishment

- Periodic re-attestation for fleet management

- Pre-workload-deployment verification

- Compliance auditing

5.3.2 OpenPRoT as Verifier (SPDM Requester + Local Verifier)

When OpenPRoT needs to establish trust in platform devices:

Flow:

- OpenPRoT (SPDM Requester) initiates SPDM session with platform device

- SPDM version negotiation and capability exchange

- Algorithm negotiation

- Certificate chain retrieval from device (GET_CERTIFICATE)

- Measurement request (GET_MEASUREMENTS) with nonce

- Platform Device (SPDM Responder) returns evidence

- OpenPRoT Local Verifier receives evidence

- If OCP EAT format: Native verification path

- If non-OCP format: Plugin-based verification path

- Local Verifier appraises evidence against reference values

- Local trust decision made by OpenPRoT

- Result used for platform composition decisions

Standard Measurement Report:

OpenPRoT follows the SPDM Standard Measurement Report format for evidence collection from devices. This standardized approach ensures consistent evidence structure across different device types and vendors.

For complete details on the Standard Measurement Report format, see:

https://github.com/steven-bellock/libspdm/blob/96d08a730ecbe3f05fa3a2cdbf0b7c2613b24a2f/doc/standard_measurement_report.md

Evidence Received by OpenPRoT:

- Device certificate chain (structure varies by device implementation)

- Device evidence (OCP EAT preferred, plugin-supported formats allowed)

- Device measurements and claims in Standard Measurement Report format

Verification Paths:

- OCP-Compliant Devices: Direct verification using native OCP EAT verifier

- Non-OCP Devices: Plugin-based parsing and verification

- Hybrid Platforms: Mix of OCP and non-OCP devices verified appropriately
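
The choice between the verification paths above can be sketched as a small dispatch function. This is illustrative only: the `EvidenceFormat` and `VerificationPath` enums, the numeric plugin identifiers, and the `Unsupported` outcome for a device with no matching plugin are assumptions, not part of the specification.

```rust
/// Evidence format reported by a device (hypothetical classification).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum EvidenceFormat {
    /// OCP RATS EAT Attestation Profile.
    OcpEat,
    /// Vendor-specific format, keyed by a hypothetical plugin identifier.
    Vendor(u16),
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum VerificationPath {
    NativeOcpEat,
    Plugin(u16),
    /// No installed plugin understands the format (assumed outcome).
    Unsupported,
}

/// Picks the verification path for one device.
pub fn select_path(format: EvidenceFormat, installed_plugins: &[u16]) -> VerificationPath {
    match format {
        EvidenceFormat::OcpEat => VerificationPath::NativeOcpEat,
        EvidenceFormat::Vendor(id) if installed_plugins.contains(&id) => VerificationPath::Plugin(id),
        EvidenceFormat::Vendor(_) => VerificationPath::Unsupported,
    }
}

/// On a hybrid platform, each device is routed independently.
pub fn select_paths(devices: &[EvidenceFormat], installed_plugins: &[u16]) -> Vec<VerificationPath> {
    devices
        .iter()
        .map(|format| select_path(*format, installed_plugins))
        .collect()
}
```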

Use Cases:

- Verifying network cards, storage controllers, accelerators, and SoCs

- Establishing trust in platform composition

- Air-gapped deployments without external verifier access

- Real-time device trust decisions

5.4 Attestation Flow

The basic attestation flow follows these steps:

Phase 1: Measurement Collection (Boot Time)

- PRoT Hardware Boot ROM (immutable) starts execution

- Boot ROM measures OpenPRoT bootloader (First Mutable Code)

- Hardware-specific key derivation and certificate generation occurs

- Control transfers to OpenPRoT bootloader

- Bootloader measures OpenPRoT runtime firmware

- Hardware-specific measurement chain continues

- Control transfers to OpenPRoT runtime firmware

- Runtime firmware initializes attestation services

- Runtime firmware measures platform components (optional)

Note: The specific measurement and key derivation mechanisms in steps 3 and 6 are hardware-dependent and outside the scope of OpenPRoT firmware.

Phase 2: Evidence Generation (On Request)

- External requester initiates SPDM session with OpenPRoT

- OpenPRoT SPDM Responder receives attestation request (GET_MEASUREMENTS)

- OpenPRoT collects current measurements from platform state

- OpenPRoT formats measurements as TCG Concise Evidence (reference-triples)

- OpenPRoT constructs RATS EAT with OCP Profile:

- Sets issuer to OpenPRoT identifier

- Includes requester-provided nonce

- Embeds Concise Evidence in measurements claim

- Adds CoRIM locator URI

- OpenPRoT signs EAT using hardware-provided attestation key (COSE signature)

- OpenPRoT returns EAT and certificate chain to requester

Phase 3: Evidence Conveyance

- SPDM Responder transmits evidence via SPDM protocol

- Evidence includes:

- Certificate chain (for signature verification)

- Signed EAT (containing measurements)

- Optional: Additional endorsements

- Transport layer delivers evidence to verifier

Phase 4: Reference Value Retrieval

- Verifier extracts CoRIM locator from EAT

- Verifier retrieves the reference-value CoRIM from the repository

- Verifier retrieves endorsements (device identity, certifications)

- Verifier validates CoRIM signatures

- Verifier loads appraisal policy

Phase 5: Appraisal

- Verifier validates EAT signature using certificate chain

- Verifier checks certificate chain to trusted root

- Verifier verifies nonce freshness

- Verifier extracts Concise Evidence from EAT measurements claim

- Verifier parses reference-triples from Concise Evidence

- For each target environment in evidence:

- Compare against CoRIM reference values

- Check measurements match expected values

- Verify SVN meets minimum requirements

- Apply policy rules

- Verifier generates attestation result
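
The per-environment comparison in Phase 5 can be sketched as follows. This is a simplified illustration under stated assumptions: signature and certificate-chain validation are taken to have already succeeded, and the `Measurement`, `Reference`, and `Finding` types are hypothetical stand-ins for data that would really come from Concise Evidence and a CoRIM.

```rust
use std::collections::HashMap;

/// One measured target environment as seen in evidence (hypothetical shape).
pub struct Measurement {
    pub environment: String,
    pub digest: Vec<u8>,
    pub svn: u32,
}

/// Reference values for one target environment (hypothetical shape).
pub struct Reference {
    pub digest: Vec<u8>,
    pub min_svn: u32,
}

#[derive(Debug, PartialEq)]
pub enum Finding {
    StaleNonce,
    UnknownEnvironment(String),
    DigestMismatch(String),
    SvnTooLow { environment: String, found: u32, required: u32 },
}

/// Returns every finding; an empty list means the evidence matched the reference values.
pub fn appraise(
    evidence_nonce: &[u8],
    expected_nonce: &[u8],
    measurements: &[Measurement],
    references: &HashMap<String, Reference>,
) -> Vec<Finding> {
    let mut findings = Vec::new();

    // Freshness: the nonce in the evidence must be the one the requester supplied.
    if evidence_nonce != expected_nonce {
        findings.push(Finding::StaleNonce);
    }

    for m in measurements {
        match references.get(&m.environment) {
            None => findings.push(Finding::UnknownEnvironment(m.environment.clone())),
            Some(r) => {
                if m.digest != r.digest {
                    findings.push(Finding::DigestMismatch(m.environment.clone()));
                }
                if m.svn < r.min_svn {
                    findings.push(Finding::SvnTooLow {
                        environment: m.environment.clone(),
                        found: m.svn,
                        required: r.min_svn,
                    });
                }
            }
        }
    }
    findings
}
```

Collecting all findings instead of stopping at the first one gives the policy step enough detail to distinguish, for example, an outdated SVN from an unknown component.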

Phase 6: Trust Decision

- Attestation result conveyed to Relying Party

- Relying Party evaluates result against requirements

- Relying Party makes operational decision:

- Accept platform for use

- Reject platform

- Request additional evidence

- Apply restricted usage policy

5.5 Trust Model

OpenPRoT's attestation architecture relies on the following trust assumptions:

5.5.1 Hardware Trust Anchor

Trusted Components:

- PRoT Hardware Boot ROM (immutable code)

- Hardware cryptographic accelerators

- OTP/Fuse storage for device secrets

- Hardware isolation mechanisms

Assumptions:

- Boot ROM is free from vulnerabilities

- Hardware random number generation is cryptographically secure

- Secrets in OTP/fuses cannot be extracted

- Hardware isolation prevents unauthorized access to secrets

Hardware-Specific Trust:

The specific trust properties and security guarantees are determined by the underlying PRoT hardware implementation. Hardware vendors must document:

- Root of trust initialization process

- Secret storage mechanisms

- Key derivation approach

- Isolation boundaries

- Cryptographic capabilities

5.5.2 Firmware Trust Chain

Trust Establishment:

- Boot ROM measures and authenticates OpenPRoT bootloader

- Bootloader measures and authenticates OpenPRoT runtime

- Each layer's measurements are recorded

- Compromise of any layer results in detectable measurement changes

Properties:

- Measurements cannot be forged without detection

- Certificate chain provides cryptographic proof of boot integrity

- Hardware-specific key binding ensures authenticity

OpenPRoT Scope:

OpenPRoT firmware operates within the trust chain established by the underlying hardware. The firmware:

- Collects and reports measurements

- Generates evidence in standardized formats

- Implements SPDM responder and requester roles

- Provides local verification capabilities

The underlying measurement and key derivation mechanisms are hardware-dependent.

5.5.3 Cryptographic Trust

Cryptographic Assumptions:

- Digital signatures cannot be forged without private key

- Hash collisions are computationally infeasible

- Key derivation functions provide one-way security

- COSE signature scheme provides authenticity and integrity

Key Protection:

Threat: Attacker provides false reference values to verifier

Mitigation:

- CoRIM signed by trusted authority

- Verifier validates CoRIM signature before use

- Secure distribution channels for reference values

- Verifier configured with trusted root certificates

5.6.7 Man-in-the-Middle Attacks

Threat: Attacker intercepts and modifies attestation messages

Mitigation:

- SPDM secure sessions provide encryption and authentication

- Evidence signed by device prevents modification

- Certificate-based mutual authentication

- Integrity protection on all messages

5.6.8 Plugin Exploitation

5.7 Device Identity Provisioning

OpenPRoT supports flexible device identity provisioning to accommodate different deployment models and ownership scenarios.

5.7.1 Identity Types

Manufacturer-Provisioned Identity:

- Provisioned by hardware manufacturer during production

- Rooted in hardware-unique secrets

- Provides vendor attestation anchor

- Permanent identity tied to hardware

Owner-Provisioned Identity:

- Provisioned by platform owner during deployment

- Enables owner-controlled attestation anchor

- Supports organizational PKI integration

- Can be updated by authorized owner

5.7.2 Owner Identity Provisioning with OpenPRoT

OpenPRoT implements the OCP Device Identity Provisioning specification to enable platform owners to provision owner-controlled identities to devices under their control. OpenPRoT acts as the intermediary between the owner and the device, facilitating secure identity provisioning.

Provisioning Process:

The owner identity provisioning follows the standardized flow defined in the OCP specification:

- Owner Initiates Provisioning: Owner uses OpenPRoT to begin owner identity provisioning process

- CSR Collection: OpenPRoT collects Certificate Signing Request (CSR) from the target device

- Device generates identity key pair internally

- Device creates CSR containing public key

- OpenPRoT retrieves CSR from device

- Trust Establishment: OpenPRoT establishes trust in the device's identity key

- Verifies device's manufacturer-provisioned identity certificate chain

- Validates that CSR is signed by device

- Confirms key is hardware-protected

- Provides attestation evidence to owner

- Endorsement Generation: Owner generates identity endorsement

- Owner reviews device attestation evidence

- Owner verifies device trustworthiness

- Owner signs CSR with owner CA

- Owner creates identity certificate

- Endorsement Provisioning: OpenPRoT provisions the endorsement to the device

- OpenPRoT receives signed identity certificate from owner

- OpenPRoT provisions identity certificate to device

- Device validates and stores identity certificate

- Verification: OpenPRoT verifies successful provisioning

- Requests device to use owner-provisioned identity for attestation

- Validates identity certificate chain

- Confirms device can sign with identity key
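
The six provisioning steps above form a strictly ordered flow, which can be sketched as a small state machine. The enum and function names are hypothetical, and treating any failed step as aborting the whole flow is an assumption made for illustration; the OCP specification remains the authority on error handling.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ProvisioningStep {
    OwnerInitiates,
    CsrCollection,
    TrustEstablishment,
    EndorsementGeneration,
    EndorsementProvisioning,
    Verification,
    Done,
    Failed,
}

impl ProvisioningStep {
    /// Moves to the next step when the current one succeeded.
    /// `Done` and `Failed` are terminal; a failure anywhere else aborts the flow.
    pub fn advance(self, step_succeeded: bool) -> ProvisioningStep {
        use ProvisioningStep::*;
        match (self, step_succeeded) {
            (Done, _) | (Failed, _) => self,
            (_, false) => Failed,
            (OwnerInitiates, true) => CsrCollection,
            (CsrCollection, true) => TrustEstablishment,
            (TrustEstablishment, true) => EndorsementGeneration,
            (EndorsementGeneration, true) => EndorsementProvisioning,
            (EndorsementProvisioning, true) => Verification,
            (Verification, true) => Done,
        }
    }
}

/// Replays a sequence of step outcomes starting from the first step.
pub fn run_provisioning(outcomes: &[bool]) -> ProvisioningStep {
    outcomes
        .iter()
        .fold(ProvisioningStep::OwnerInitiates, |step, ok| step.advance(*ok))
}
```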

OpenPRoT's Role:

- CSR Broker: Retrieves CSRs from devices

- Trust Validator: Verifies device identity and key protection before owner endorsement

- Provisioning Agent: Delivers owner-signed certificates to devices

- Verification Service: Confirms successful identity provisioning

Benefits:

- Owner Control: Platform owners control their attestation trust anchor

- PKI Integration: Enables integration with organizational PKI infrastructure

- Privacy: Owner-provisioned identity can provide privacy from manufacturer tracking

- Flexibility: Supports diverse deployment and ownership models

- Automated Workflow: OpenPRoT automates the provisioning process

Specification Reference:

For complete details on the device identity provisioning process, see:

https://opencomputeproject.github.io/Security/device-identity-provisioning/HEAD/

6. Claims and Target Environments

TODO: Define OpenPRoT-specific claims and target environment structures

7. Evidence Formats

TODO: Detail evidence format specifications for OpenPRoT

8. Reference Values and Endorsements

TODO: Describe reference value and endorsement mechanisms

9. SPDM Protocol Integration

TODO: Specify SPDM protocol bindings and requirements

10. Local Verifier

TODO: Define local verifier architecture and capabilities

11. Attestation Use Cases

TODO: Document common attestation scenarios and workflows

12. Security Considerations

TODO: Additional security considerations beyond threat model

13. Implementation Guidelines

TODO: Guidance for implementers of OpenPRoT attestation

Firmware Update

Status: Draft

Overview

This section details the OpenPRoT firmware update mechanism, incorporating the DMTF standards for PLDM and SPDM, while emphasizing the security and resilience principles of the project.

Goals

- To provide a secure and reliable method for updating OpenPRoT firmware.

- To ensure that firmware updates are authenticated and authorized.

- To provide a recovery mechanism in the event of a failed update.

- To align with industry standards for firmware updates (PLDM, SPDM).

Use Cases

- Updating the OpenPRoT firmware itself.

- Updating the firmware of downstream devices managed by OpenPRoT.

- Applying critical security updates and bug fixes.

- Updating firmware to enable new features.

PLDM for Firmware Update

OpenPRoT devices will support PLDM Type 5 version 1.3.0 for Firmware Updates. This will be the primary mechanism for transferring firmware images and metadata to the device. PLDM provides a standardized method for managing firmware updates and is particularly well-suited for out-of-band management scenarios.

PLDM Firmware Update Package

The firmware update package is essential for conveying the information required for the PLDM Firmware Update commands.

Package Header

The package will contain a header that describes the contents of the firmware update package, including:

- Overall packaging version and date.

- Device identifier records to specify the target OpenPRoT devices.

- Downstream device identifier records to describe target downstream devices.

- Component image information, including classification, offset, size, and version.

- A checksum for integrity verification.

Package Payload: Contains the actual firmware component images to be updated.

Package Header Information

| Field | Size (bytes) | Definition |

|---|---|---|

| PackageHeaderIdentifier | 16 | Set to 0x7B291C996DB64208801B0202E6463C78 (v1.3.0 UUID) (big endian) |

| PackageHeaderFormatRevision | 1 | Set to 0x04 (v1.3.0 header format revision) |

| PackageHeaderSize | 2 | The total byte count of this header structure, including fields within the Package Header Information, Firmware Device Identification Area, Downstream Device Identification Area, Component Image Information Area, and Checksum sections. |

| PackageReleaseDateTime | 13 | The date and time when this package was released in timestamp104 formatting. Refer to the PLDM Base Specification for field format definition. |

| ComponentBitmapBitLength | 2 | Number of bits used to represent the bitmap in the ApplicableComponents field for a matching device. This value is a multiple of 8 and is large enough to contain a bit for each component in the package. |

| PackageVersionStringType | 1 | The type of string used in the PackageVersionString field. Refer to DMTF Firmware Update Specification v.1.3.0 Table 33 for values. |

| PackageVersionStringLength | 1 | Length, in bytes, of the PackageVersionString field. |

| PackageVersionString | Variable | Package version information, up to 255 bytes. Contains a variable type string describing the version of this firmware update package. |

| DeviceIDRecordCount | 1 | The count of firmware device ID records that are defined within this package. |

| FirmwareDeviceIDRecords | Variable | Contains a record, a set of descriptors, and optional package data for each firmware device within the count provided from the DeviceIDRecordCount field. |
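
As an illustration of the layout in the table above, the following sketch parses the fields from PackageHeaderIdentifier through PackageVersionString out of a byte buffer. It assumes little-endian encoding for the multi-byte integer fields, which is the general PLDM convention, and it does not check the identifier value. The struct, error type, and function names are hypothetical.

```rust
/// Leading fields of the package header, in table order (hypothetical struct).
#[derive(Debug, PartialEq)]
pub struct PackageHeaderInfo {
    pub identifier: [u8; 16],
    pub format_revision: u8,
    pub header_size: u16,
    pub release_date_time: [u8; 13],
    pub component_bitmap_bit_length: u16,
    pub version_string_type: u8,
    pub version_string: Vec<u8>,
}

#[derive(Debug, PartialEq)]
pub enum HeaderError {
    Truncated,
    UnsupportedRevision(u8),
    BadBitmapLength(u16),
}

/// 16 + 1 + 2 + 13 + 2 + 1 + 1 bytes precede PackageVersionString.
const FIXED_LEN: usize = 36;

pub fn parse_package_header_info(buf: &[u8]) -> Result<PackageHeaderInfo, HeaderError> {
    if buf.len() < FIXED_LEN {
        return Err(HeaderError::Truncated);
    }

    let mut identifier = [0u8; 16];
    identifier.copy_from_slice(&buf[0..16]);

    // The table fixes the header format revision at 0x04.
    let format_revision = buf[16];
    if format_revision != 0x04 {
        return Err(HeaderError::UnsupportedRevision(format_revision));
    }

    let header_size = u16::from_le_bytes([buf[17], buf[18]]);

    let mut release_date_time = [0u8; 13];
    release_date_time.copy_from_slice(&buf[19..32]);

    // The bitmap length is required to be a multiple of 8.
    let component_bitmap_bit_length = u16::from_le_bytes([buf[32], buf[33]]);
    if component_bitmap_bit_length % 8 != 0 {
        return Err(HeaderError::BadBitmapLength(component_bitmap_bit_length));
    }

    let version_string_type = buf[34];
    let version_len = usize::from(buf[35]);
    let version_string = buf
        .get(FIXED_LEN..FIXED_LEN + version_len)
        .ok_or(HeaderError::Truncated)?
        .to_vec();

    Ok(PackageHeaderInfo {
        identifier,
        format_revision,
        header_size,
        release_date_time,
        component_bitmap_bit_length,
        version_string_type,
        version_string,
    })
}
```

DeviceIDRecordCount and the records that follow would be parsed starting at offset `36 + PackageVersionStringLength`.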

Firmware Device ID Descriptor

| Field | Size (bytes) | Definition |

|---|---|---|

| RecordLength | 2 | The total length in bytes for this record. The length includes the RecordLength, DescriptorCount, DeviceUpdateOptionFlags, ComponentImageSetVersionStringType, ComponentImageSetVersionStringLength, FirmwareDevicePackageDataLength, ApplicableComponents, ComponentImageSetVersionString, RecordDescriptors, and FirmwareDevicePackageData fields. |

| DescriptorCount | 1 | The number of descriptors included within the RecordDescriptors field for this record. |

| DeviceUpdateOptionFlags | 4 | 32-bit field where each bit represents an update option. bit 0 is set to 1 (Continue component updates after failure). |

| ComponentImageSetVersionStringType | 1 | The type of string used in the ComponentImageSetVersionString field. Refer to DMTF Firmware Update Specification v.1.3.0 Table 33 for values. |

| ComponentImageSetVersionStringLength | 1 | Length, in bytes, of the ComponentImageSetVersionString. |

| FirmwareDevicePackageDataLength | 2 | Length in bytes of the FirmwareDevicePackageData field. If no data is provided, set to 0x0000. |

| ReferenceManifestLength | 4 | Length in bytes of the ReferenceManifestData field. If no data is provided, set to 0x00000000. |

| ApplicableComponents | Variable | Bitmap indicating which firmware components apply to devices matching this Device Identifier record. A set bit indicates the Nth component in the payload is applicable to this device. Bit 0: OpenPRoT RT Image; bit 1: Downstream SoC Manifest; bit 2: Downstream SoC Firmware; bit 3: Downstream EEPROM. |

| ComponentImageSetVersionString | Variable | Component Image Set version information, up to 255 bytes. Describes the version of component images applicable to the firmware device indicated in this record. |

| RecordDescriptors | Variable | These descriptors are defined by the vendor. Refer to DMTF Firmware Update Specification v.1.3.0 Table 7 for details of these fields and the values that can be selected. |

| FirmwareDevicePackageData | Variable | Optional data provided within the firmware update package for the FD during the update process. If FirmwareDevicePackageDataLength is 0x0000, this field contains no data. |

| ReferenceManifestData | Variable | Optional data field containing a Reference Manifest for the firmware update. If present, it describes the firmware update provided by this package. If ReferenceManifestLength is 0x00000000, this field contains no data. |
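
The ApplicableComponents bit assignments in the table above can be decoded and encoded as shown in this sketch. It assumes bit N is stored in byte N / 8 at bit position N % 8 (least significant bit first); the `Component` enum and both function names are hypothetical.

```rust
/// Components in ApplicableComponents bit order (bit 0 first).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Component {
    OpenProtRtImage = 0,
    DownstreamSocManifest = 1,
    DownstreamSocFirmware = 2,
    DownstreamEeprom = 3,
}

const BIT_ORDER: [Component; 4] = [
    Component::OpenProtRtImage,
    Component::DownstreamSocManifest,
    Component::DownstreamSocFirmware,
    Component::DownstreamEeprom,
];

/// Returns the components whose bit is set; bits beyond the buffer count as clear.
pub fn applicable_components(bitmap: &[u8]) -> Vec<Component> {
    let mut out = Vec::new();
    for (bit, component) in BIT_ORDER.iter().enumerate() {
        let byte: u8 = bitmap.get(bit / 8).copied().unwrap_or(0);
        if (byte & (1u8 << (bit % 8))) != 0 {
            out.push(*component);
        }
    }
    out
}

/// Builds a bitmap of `bit_length` bits (ComponentBitmapBitLength, a multiple of 8).
pub fn encode_applicable_components(components: &[Component], bit_length: u16) -> Vec<u8> {
    let mut bitmap = vec![0u8; usize::from(bit_length) / 8];
    for component in components {
        let bit = *component as usize;
        if let Some(byte) = bitmap.get_mut(bit / 8) {
            *byte |= 1u8 << (bit % 8);
        }
    }
    bitmap
}
```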

Downstream Device ID Descriptor

| Field | Size | Definition |

|---|---|---|

| DownstreamDeviceIDRecordCount | 1 | Set to 0. |

Component Image Information

| Field | Size | Definition |

|---|---|---|

| ComponentClassification | 2 | 0x000A (Firmware): Downstream EEPROM, Downstream SoC Firmware, and OpenPRoT RT Image. 0x0001 (Other): Downstream SoC Manifest. |

| ComponentIdentifier | 2 | Unique value selected by the FD vendor to distinguish between component images. 0x0001: OpenPRoT RT Image; 0x0002: Downstream SoC Manifest; 0x0003: Downstream EEPROM; 0x1000-0xFFFF: reserved for other vendor-defined SoC images. |

| ComponentComparisonStamp | 4 | Value used as a comparison in determining if a firmware component is down-level or up-level. When ComponentOptions bit 1 is set, this field should use a comparison stamp format (e.g., MajorMinorRevisionPatch). If not set, use 0xFFFFFFFF. |

| ComponentOptions | 2 | Refer to ComponentOptions definition in DMTF Firmware Update Specification v.1.3.0 |

| RequestedComponentActivationMethod | 2 | Refer to RequestedComponentActivationMethod definition in DMTF Firmware Update Specification v.1.3.0 |

| ComponentLocationOffset | 4 | Offset in bytes from byte 0 of the package header to where the component image begins. |

| ComponentSize | 4 | Size in bytes of the Component image. |

| ComponentVersionStringType | 1 | Type of string used in the ComponentVersionString field. Refer to DMTF Firmware Update Specification v.1.3.0 Table 33 for values. |

| ComponentVersionStringLength | 1 | Length, in bytes, of the ComponentVersionString. |

| ComponentVersionString | Variable | Component version information, up to 255 bytes. Contains a variable type string describing the component version. |

| ComponentOpaqueDataLength | 4 | Length in bytes of the ComponentOpaqueData field. If no data is provided, set to 0x00000000. |

| ComponentOpaqueData | Variable | Optional data transferred to the FD/FDP during the firmware update |
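
The ComponentComparisonStamp rule above can be illustrated with a short sketch. The exact bit layout of the MajorMinorRevisionPatch format is not given in this section, so packing one byte per part, most significant first, is an assumption made here so that plain integer comparison orders versions correctly. All function names are hypothetical.

```rust
/// Value used when ComponentOptions bit 1 is not set.
pub const NO_COMPARISON_STAMP: u32 = 0xFFFF_FFFF;

/// Packs a four-part version with one byte per part, major part most significant.
/// This layout is an assumption made for illustration.
pub fn comparison_stamp(major: u8, minor: u8, revision: u8, patch: u8) -> u32 {
    u32::from_be_bytes([major, minor, revision, patch])
}

/// Chooses the field value based on ComponentOptions bit 1.
pub fn comparison_stamp_field(component_options: u16, version: (u8, u8, u8, u8)) -> u32 {
    if (component_options & 0b10) != 0 {
        comparison_stamp(version.0, version.1, version.2, version.3)
    } else {
        NO_COMPARISON_STAMP
    }
}

/// A candidate image is down-level when its stamp is lower than the running image's stamp.
pub fn is_down_level(candidate: u32, running: u32) -> bool {
    candidate < running
}
```

With this packing, version 1.10.0.0 compares higher than 1.2.0.0, which a string comparison of the same versions would get wrong.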

Component Identifiers

| Component Identifier | Name | Description |

|---|---|---|

| 0x0 | OpenPRoT RT Image | OpenPRoT manifest and firmware images (e.g. BL0, RT firmware). |

| 0x1 | Downstream SoC Manifest | SoC manifest covering firmware images. Used to stage verification of the firmware payload. |

| 0x2 | Downstream SoC Firmware | SoC firmware payload. |

| 0x3 | Downstream EEPROM | Bulk update of downstream EEPROM |

| >= 0x1000 | Vendor defined components | |

PLDM Firmware Update Process

The update process will involve the following steps:

- RequestUpdate: The Update Agent (UA) initiates the firmware update by sending the RequestUpdate command to the OpenPRoT device. We refer to OpenPRoT as the Firmware Device (FD).

- GetPackageData: If there is optional package data for the Firmware Device (FD), the UA will transfer it to the FD prior to transferring component images.

- GetDeviceMetaData: The UA may also optionally retrieve FD metadata that will be saved and restored after all components are updated.

- PassComponentTable: The UA will send the PassComponentTable command with information about the component images to be updated. This includes the identifier, component comparison stamp, classification, and version information for each component image.

- UpdateComponent: The UA will send the UpdateComponent command for each component, which includes: component classification, component version, component size, and update options. The UA will subsequently transfer component images using the RequestFirmwareData command.

- TransferComplete: After successfully transferring component data, the FD will send a TransferComplete command.

- VerifyComplete: Once a component transfer is complete the FD will perform a verification of the image.

- ApplyComplete: The FD will use the ApplyComplete command to signal that the component image has been successfully applied.

- ActivateFirmware: After all components are transferred, the UA sends the ActivateFirmware command. If self-contained activation is supported, the FD should immediately enable the new component images. Otherwise, the component enters a "pending activation" state which will require a reset to complete the activation.

- GetStatus: The UA will periodically use the GetStatus command to detect when the activation process has completed.
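
The ordering constraints in the steps above can be sketched as a transition table. This is a simplified model, not the state machine from the PLDM specification: the state names are hypothetical, only the success path is modeled, and the optional or polling commands (GetPackageData, GetDeviceMetaData, RequestFirmwareData, GetStatus) are left out.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Command {
    RequestUpdate,
    PassComponentTable,
    UpdateComponent,
    TransferComplete,
    VerifyComplete,
    ApplyComplete,
    ActivateFirmware,
}

/// Hypothetical view of where the FD is in the update flow.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum UpdateState {
    Idle,
    AwaitingComponentTable,
    ReadyForComponent,
    Transferring,
    Verifying,
    Applying,
    Activating,
}

/// Returns the next state, or `None` when the command is out of order.
pub fn step(state: UpdateState, command: Command) -> Option<UpdateState> {
    use Command::*;
    use UpdateState::*;
    match (state, command) {
        (Idle, RequestUpdate) => Some(AwaitingComponentTable),
        (AwaitingComponentTable, PassComponentTable) => Some(ReadyForComponent),
        (ReadyForComponent, UpdateComponent) => Some(Transferring),
        (Transferring, TransferComplete) => Some(Verifying),
        (Verifying, VerifyComplete) => Some(Applying),
        // After one component is applied, the next component can start.
        (Applying, ApplyComplete) => Some(ReadyForComponent),
        (ReadyForComponent, ActivateFirmware) => Some(Activating),
        _ => None,
    }
}

/// Replays a command trace from `Idle`; `None` means the trace violated the ordering.
pub fn replay(commands: &[Command]) -> Option<UpdateState> {
    commands
        .iter()
        .try_fold(UpdateState::Idle, |state, command| step(state, *command))
}
```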

For downstream device updates, the UA will use RequestDownstreamDeviceUpdate to initiate the update sequence on the FDP. The rest of the process is similar, with the FDP acting as a proxy for the downstream device.

PLDM Firmware Update Error Handling and Recovery

- The PLDM specification defines a set of completion codes for error conditions.

- OpenPRoT will adhere to the timing specifications defined in the PLDM specification (DSP0240 and DSP0267) for command timeouts and retries.

- The CancelUpdateComponent command is available to cancel the update of a component image, and the CancelUpdate command can be used to exit from update mode. The UA should attempt to complete the update and avoid cancelling if possible.

- OpenPRoT devices will implement a dual-bank approach for firmware components. This will allow for a fallback to a known-good firmware image in case of a failed update. If a power loss occurs prior to the ActivateFirmware command, the FD will continue to use the currently active image, and the UA can restart the firmware update process.
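The dual-bank behaviour can be illustrated with a minimal sketch. The `Bank` and `DualBank` types and their methods are hypothetical names chosen for this example, and the state is kept in memory only; an actual device would have to persist the active-bank selection in non-volatile storage.

```rust
/// The two firmware storage banks (hypothetical names).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Bank {
    A,
    B,
}

impl Bank {
    /// Returns the opposite bank.
    pub fn other(self) -> Bank {
        match self {
            Bank::A => Bank::B,
            Bank::B => Bank::A,
        }
    }
}

/// In-memory model of dual-bank bookkeeping.
#[derive(Debug)]
pub struct DualBank {
    active: Bank,
    /// True once a verified image has been applied to the inactive bank.
    pending: bool,
}

impl DualBank {
    pub fn new(active: Bank) -> Self {
        Self { active, pending: false }
    }

    /// The bank the device currently runs from.
    pub fn active(&self) -> Bank {
        self.active
    }

    /// New images are always written to the inactive bank.
    pub fn update_target(&self) -> Bank {
        self.active.other()
    }

    /// Records that the inactive bank now holds an applied image.
    pub fn mark_applied(&mut self) {
        self.pending = true;
    }

    /// Models ActivateFirmware: switches banks only if an image is pending.
    pub fn activate(&mut self) -> bool {
        if !self.pending {
            return false;
        }
        self.active = self.active.other();
        self.pending = false;
        true
    }

    /// Models a power loss before activation: the active bank is untouched
    /// and the partially completed update is discarded.
    pub fn interrupt_update(&mut self) {
        self.pending = false;
    }
}
```

Because the active bank only changes inside `activate`, an interrupted update in this model never affects the image the device boots from.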

Device Abstraction

Status: Draft

The OpenPRoT Driver Development Kit (DDK) provides a set of generic Rust traits and types for interacting with I/O peripherals and cryptographic algorithm accelerators encountered in the class of devices that perform Root of Trust (RoT) functions.

The DDK isolates the OpenPRoT developer from the underlying embedded processor and operating system.

Scope

This section provides a non-exhaustive list of peripherals that fall within the scope of the Device Driver Kit (DDK).

I/O Peripherals

| Device | Description |

|---|---|

| SMBus/I2C Monitor/Filter | |

| Delay | Delay execution for specified durations in microseconds or milliseconds. |

Cryptographic Functions

| Cryptographic Algorithm | Description |

|---|---|

| AES | Symmetric encryption and decryption |

| ECC | ECDSA signature and verification |

| digest | Cryptographic hash functions |

| RSA | RSA signature and verification |

We will refer to the collection of I/O peripherals and cryptographic algorithm accelerators as peripherals from now on.

Design Goals

Platform Agnostic

The goal of the DDK is to provide a consistent and flexible interface for applications to invoke peripheral functionality, regardless of whether the interaction with the underlying peripheral driver is through system calls to a kernel mode device driver, inter-task communication or direct access to memory-mapped peripheral registers.

Execution Model Agnostic

The DDK should be agnostic of the execution model and provide flexibility for its users.

The traits in the DDK are to be segregated into different crates according to the kind of API they expose: synchronous, asynchronous, or non-blocking.

These crates ensure that the DDK can cater to various execution models, making it versatile for different application requirements.

- Synchronous APIs: The main open-prot-ddk crate contains blocking traits where operations are performed synchronously before returning.

- Asynchronous APIs: The open-prot-ddk-async crate provides traits for asynchronous operations using Rust's async/await model.

- Non-blocking APIs: The open-prot-ddk-nb crate offers traits for non-blocking operations which allows for polling-based execution.
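To make the difference between the three flavors concrete, the following sketch declares the same hypothetical "read one word" operation in each style. None of these traits are taken from the DDK crates: `ReadWord`, `TryReadWord`, `ReadWordAsync`, `NbError`, and `MockSensor` are assumptions for illustration, and `NbError` is only loosely modeled on the error type used by the `nb` crate.

```rust
use core::convert::Infallible;

/// Blocking flavor: returns only once the word is available.
pub trait ReadWord {
    type Error;
    fn read(&mut self) -> Result<u32, Self::Error>;
}

/// Error type for the polling flavor (hypothetical).
#[derive(Debug, PartialEq)]
pub enum NbError<E> {
    /// The operation has not completed yet; call again later.
    WouldBlock,
    /// The operation failed.
    Other(E),
}

/// Non-blocking flavor: never waits, the caller polls until it succeeds.
pub trait TryReadWord {
    type Error;
    fn try_read(&mut self) -> Result<u32, NbError<Self::Error>>;
}

/// Asynchronous flavor: the caller awaits the result on an executor.
#[allow(async_fn_in_trait)]
pub trait ReadWordAsync {
    type Error;
    async fn read_async(&mut self) -> Result<u32, Self::Error>;
}

/// Mock peripheral that becomes ready after a number of polls.
pub struct MockSensor {
    pub polls_until_ready: u32,
    pub value: u32,
}

impl TryReadWord for MockSensor {
    type Error = Infallible;
    fn try_read(&mut self) -> Result<u32, NbError<Infallible>> {
        if self.polls_until_ready > 0 {
            self.polls_until_ready -= 1;
            Err(NbError::WouldBlock)
        } else {
            Ok(self.value)
        }
    }
}

impl ReadWord for MockSensor {
    type Error = Infallible;
    fn read(&mut self) -> Result<u32, Infallible> {
        // The blocking version simply spins on the polling version.
        loop {
            match self.try_read() {
                Ok(value) => return Ok(value),
                Err(NbError::WouldBlock) => continue,
                Err(NbError::Other(error)) => return Err(error),
            }
        }
    }
}

impl ReadWordAsync for MockSensor {
    type Error = Infallible;
    async fn read_async(&mut self) -> Result<u32, Infallible> {
        // A real driver would await an interrupt-driven future here;
        // the mock completes immediately.
        self.polls_until_ready = 0;
        Ok(self.value)
    }
}
```

Driving `read_async` requires an executor, which is outside the scope of this sketch. The method names differ per trait only so that one type can implement all three without ambiguity at the call site.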

Design Principles

Minimalism

The design of the DDK prioritizes simplicity, making it straightforward for developers to implement. By avoiding unnecessary complexity, it ensures that the core traits and functionalities remain clear and easy to understand.

Zero Cost

This principle ensures that using the DDK introduces no additional overhead. In other words, the abstraction layer should neither slow down the system nor consume more resources than direct hardware access.

Composability

The HAL shall be designed to be modular and flexible, allowing developers to easily combine different components. This composability means that various drivers and peripherals can work together seamlessly, making it easier to build complex systems from simple, reusable parts.

Robust Error Handling

Trait methods must be designed to handle potential failures, as hardware interactions can be unpredictable. This means that methods invoking hardware should return a Result type to account for various failure scenarios, including misconfiguration, power issues, or disabled hardware.

```rust
pub trait SpiRead<W> {
    type Error;

    fn read(&mut self, words: &mut [W]) -> Result<(), Self::Error>;
}
```

While the default approach should be to use fallible methods, HAL implementations can also provide infallible versions if the hardware guarantees no failure. This ensures that generic code can rely on robust error handling, while platform-specific code can avoid unnecessary boilerplate when appropriate.

```rust
use core::convert::Infallible;

pub struct MyInfallibleSpi;

// `SpiRead` is the trait defined in the previous example.
impl SpiRead<u8> for MyInfallibleSpi {
    type Error = Infallible;

    fn read(&mut self, words: &mut [u8]) -> Result<(), Self::Error> {
        // Perform the read operation
        Ok(())
    }
}
```
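As a usage sketch, generic code can propagate whatever error the implementation reports, while code that knows it is working with the infallible implementation can discard the error branch without `unwrap`. The `read_id` and `read_id_infallible` helpers and the `0xA5` fill pattern are hypothetical and exist only to make the example self-contained.

```rust
use core::convert::Infallible;

pub trait SpiRead<W> {
    type Error;
    fn read(&mut self, words: &mut [W]) -> Result<(), Self::Error>;
}

pub struct MyInfallibleSpi;

impl SpiRead<u8> for MyInfallibleSpi {
    type Error = Infallible;
    fn read(&mut self, words: &mut [u8]) -> Result<(), Infallible> {
        // Stand-in for a hardware read: fill the buffer with a fixed pattern.
        words.fill(0xA5);
        Ok(())
    }
}

/// Generic code: works with any implementation and propagates its error.
pub fn read_id<S: SpiRead<u8>>(spi: &mut S) -> Result<[u8; 4], S::Error> {
    let mut id = [0u8; 4];
    spi.read(&mut id)?;
    Ok(id)
}

/// Platform-specific code: the error type has no values, so the `Err`
/// arm can be eliminated with an empty match instead of a panic path.
pub fn read_id_infallible(spi: &mut MyInfallibleSpi) -> [u8; 4] {
    match read_id(spi) {
        Ok(id) => id,
        Err(never) => match never {},
    }
}
```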

Separate Control and Data Path Operations

- Clarity: By separating configuration (control path) from data transfer (data path), each part of the code has a clear responsibility. This makes the code easier to understand and maintain.

- Modularity: It allows for more modular design, where control and data handling can be developed and tested independently.

Example

This example is extracted from Tock's TRD3 design document. UART functionality is decomposed into fine-grained traits defined for control path (Configure) and data path operations (Transmit and Receive).

```rust
pub trait Configure {
    fn configure(&self, params: Parameters) -> ReturnCode;
}

pub trait Transmit<'a> {
    fn set_transmit_client(&self, client: &'a dyn TransmitClient);
    fn transmit_buffer(
        &self,
        tx_buffer: &'static mut [u8],
        tx_len: usize,
    ) -> (ReturnCode, Option<&'static mut [u8]>);
    fn transmit_word(&self, word: u32) -> ReturnCode;
    fn transmit_abort(&self) -> ReturnCode;
}

pub trait Receive<'a> {
    fn set_receive_client(&self, client: &'a dyn ReceiveClient);
    fn receive_buffer(
        &self,
        rx_buffer: &'static mut [u8],
        rx_len: usize,
    ) -> (ReturnCode, Option<&'static mut [u8]>);
    fn receive_word(&self) -> ReturnCode;
    fn receive_abort(&self) -> ReturnCode;
}

pub trait Uart<'a>: Configure + Transmit<'a> + Receive<'a> {}
pub trait UartData<'a>: Transmit<'a> + Receive<'a> {}
```

Use Case: Device Sharing

- Peripheral Client Task: This task is only exposed to data path operations, such as reading from or writing to the peripheral. It interacts with the peripheral server to perform these operations without having direct access to the configuration settings.

- Peripheral Server Task: This task is responsible for managing and sharing the peripheral functionality across multiple client tasks. It has the exclusive role of configuring the peripheral for data transfer operations, ensuring that all configuration changes are centralized and controlled. This separation allows for robust access control and simplifies the management of peripheral settings.
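The split between the two tasks can be sketched in a single process. Here the server owns the peripheral and is the only code that touches the control path, while clients receive a handle that exposes data-path operations only. All names (`Configure`, `Transmit`, `MockUart`, `PeripheralServer`, `PeripheralClient`) are assumptions for this example, and a borrowed handle stands in for the inter-task communication a real system would use.

```rust
/// Control path (hypothetical trait).
pub trait Configure {
    type Error;
    fn set_baud_rate(&mut self, baud: u32) -> Result<(), Self::Error>;
}

/// Data path (hypothetical trait).
pub trait Transmit {
    type Error;
    fn transmit(&mut self, data: &[u8]) -> Result<usize, Self::Error>;
}

#[derive(Debug, PartialEq)]
pub enum UartError {
    InvalidBaudRate,
}

/// Mock peripheral; it uses `Vec` for brevity, so it is not `no_std`.
#[derive(Default)]
pub struct MockUart {
    pub baud: u32,
    pub sent: Vec<u8>,
}

impl Configure for MockUart {
    type Error = UartError;
    fn set_baud_rate(&mut self, baud: u32) -> Result<(), UartError> {
        if baud == 0 {
            return Err(UartError::InvalidBaudRate);
        }
        self.baud = baud;
        Ok(())
    }
}

impl Transmit for MockUart {
    type Error = UartError;
    fn transmit(&mut self, data: &[u8]) -> Result<usize, UartError> {
        self.sent.extend_from_slice(data);
        Ok(data.len())
    }
}

/// Server side: owns the peripheral and performs all configuration.
pub struct PeripheralServer<P> {
    peripheral: P,
}

impl<P: Configure + Transmit> PeripheralServer<P> {
    pub fn new(mut peripheral: P, baud: u32) -> Result<Self, <P as Configure>::Error> {
        peripheral.set_baud_rate(baud)?;
        Ok(Self { peripheral })
    }

    /// Hands out a handle restricted to the data path.
    pub fn client(&mut self) -> PeripheralClient<'_, P> {
        PeripheralClient { data_path: &mut self.peripheral }
    }
}

/// Client side: only bounded by `Transmit`, so it cannot reconfigure.
pub struct PeripheralClient<'a, T: Transmit> {
    data_path: &'a mut T,
}

impl<'a, T: Transmit> PeripheralClient<'a, T> {
    pub fn send(&mut self, data: &[u8]) -> Result<usize, T::Error> {
        self.data_path.transmit(data)
    }
}
```

Because `PeripheralClient` is bounded by `Transmit` alone and keeps its field private, client code has no way to reach `set_baud_rate`, which is the access-control property described above.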

Methodology

To accomplish this goal efficiently, the DDK should not reinvent the wheel but should leverage existing work in the Rust community, such as the Embedded Rust Workgroup's embedded-hal or the RustCrypto projects.

As much as possible, the OpenPRoT workgroup should evaluate, curate, and recommend existing abstractions that have already gained wide adoption.

By leveraging well-established and widely accepted abstractions, the DDK can ensure compatibility, reliability, and ease of integration across various platforms and applications. This approach not only saves development time and resources but also promotes standardization and interoperability within the ecosystem.

When abstractions need to be invented, as is the case for the I3C protocol, for instance, the OpenPRoT workgroup will design them according to the community guidelines of the project they are curated from and contribute them upstream.

Use Cases

This section illustrates the contexts where the DDK can be used.

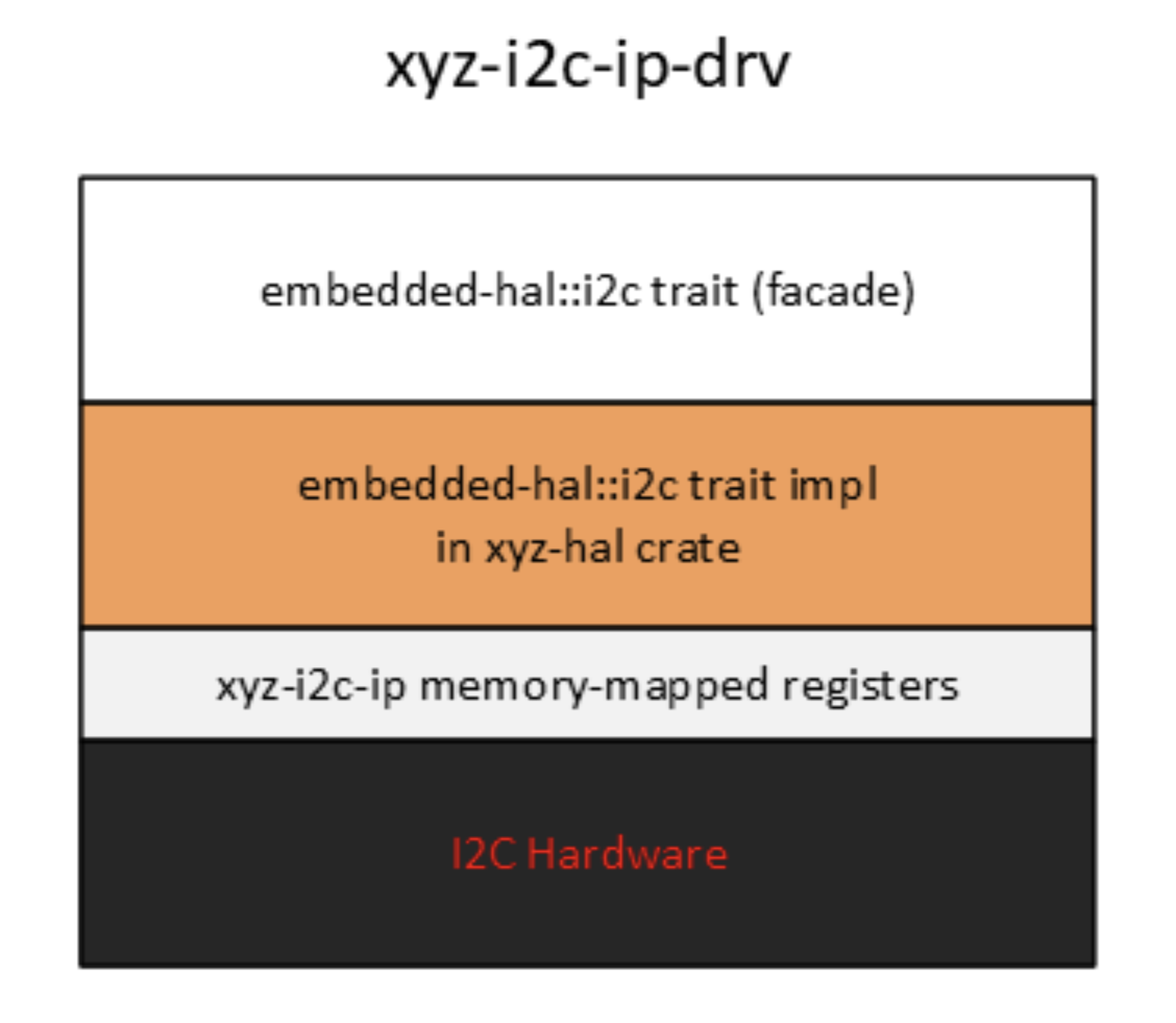

Low Level Driver

A low-level driver implements a peripheral (or cryptographic algorithm) driver trait by accessing memory-mapped registers directly, and it is distributed as a no_std crate.

A no_std crate like the one depicted in the figure above would be linked directly into a user-mode task with exclusive peripheral ownership. This use case is encountered in microkernel-based embedded O/S such as Oxide's Hubris, where drivers run in unprivileged mode.
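A minimal sketch of such a low-level driver follows. The register layout, the `STATUS_TX_READY` bit, the `WriteByte` trait, and the `XyzDriver` type are all invented for illustration and do not describe any real peripheral. The code uses only `core`, so it would also build in a no_std crate.

```rust
use core::ptr::{addr_of, addr_of_mut, read_volatile, write_volatile};

/// Hypothetical register block of a byte-oriented transmit peripheral.
#[repr(C)]
pub struct Registers {
    pub status: u32,
    pub data: u32,
}

/// Hypothetical status bit: set when the peripheral can accept a byte.
pub const STATUS_TX_READY: u32 = 1;

/// Driver trait the low-level driver implements (hypothetical).
pub trait WriteByte {
    type Error;
    fn write_byte(&mut self, byte: u8) -> Result<(), Self::Error>;
}

#[derive(Debug, PartialEq)]
pub enum Error {
    Busy,
}

/// Driver that talks to the peripheral through its register block.
pub struct XyzDriver {
    registers: *mut Registers,
}

impl XyzDriver {
    /// # Safety
    /// `base` must point to a valid register block for the lifetime of the
    /// driver, and the caller must have exclusive ownership of it.
    pub unsafe fn new(base: *mut Registers) -> Self {
        Self { registers: base }
    }
}

impl WriteByte for XyzDriver {
    type Error = Error;

    fn write_byte(&mut self, byte: u8) -> Result<(), Error> {
        // Volatile accesses keep the compiler from reordering or eliding
        // reads and writes to hardware registers.
        unsafe {
            let status = read_volatile(addr_of!((*self.registers).status));
            if status & STATUS_TX_READY == 0 {
                return Err(Error::Busy);
            }
            write_volatile(addr_of_mut!((*self.registers).data), u32::from(byte));
        }
        Ok(())
    }
}
```

On real hardware `base` would be the peripheral's memory-mapped address; in a host-side test it can point at an ordinary `Registers` value, which is how the behaviour can be checked without a device.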

Proxy for a Kernel Mode Device Driver

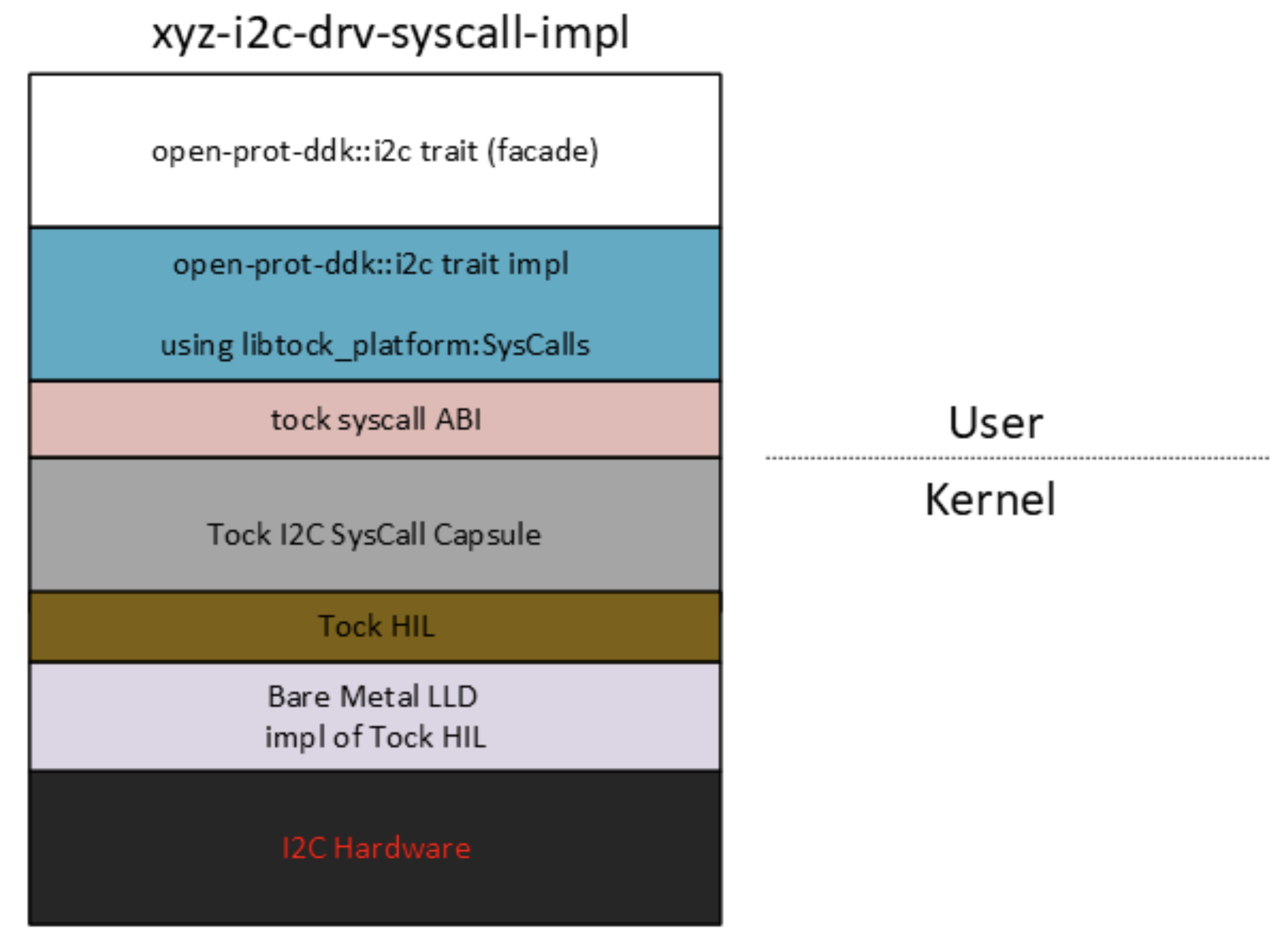

In this section, we explore how a trait from the Device Development Kit (DDK) can enhance portability by decoupling the application writer from the underlying embedded stack.

The user of the peripheral is an application that is interacting with a kernel mode device driver via system calls, but is completely isolated from the underlying implementation.

This is applicable to any O/S with device drivers living in the kernel, like the Tock O/S.

Proxy for a Peripheral Server Task

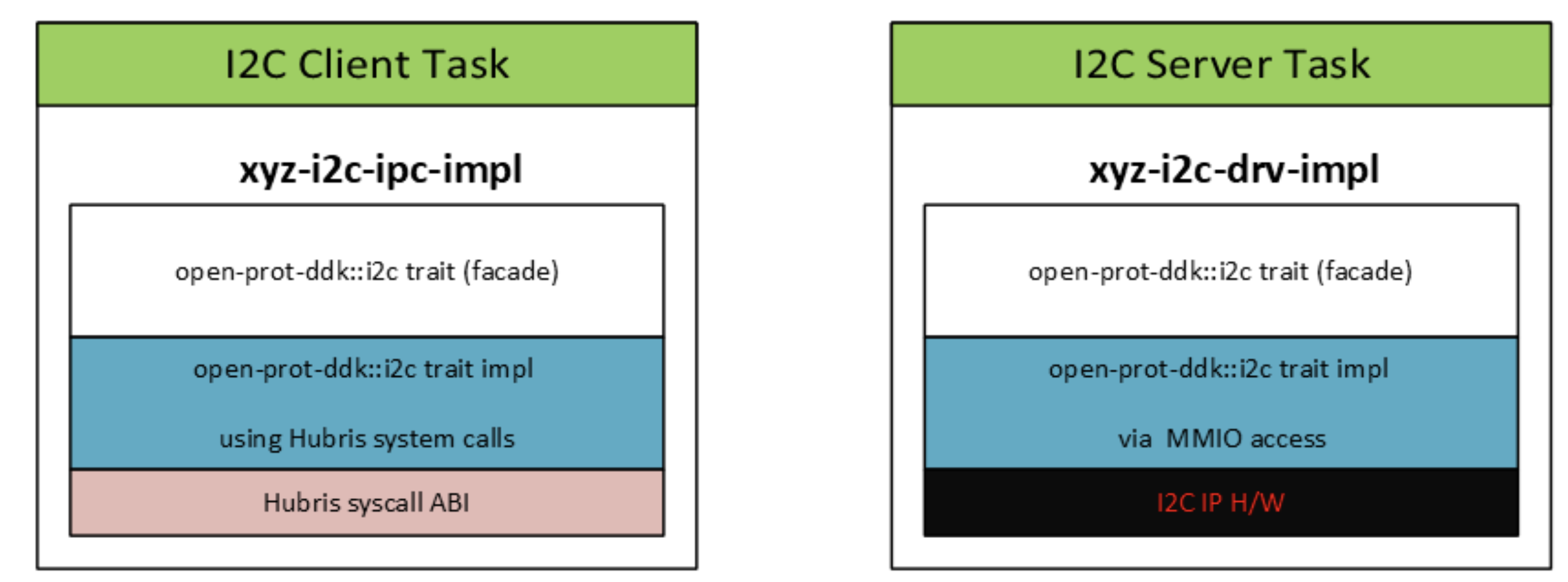

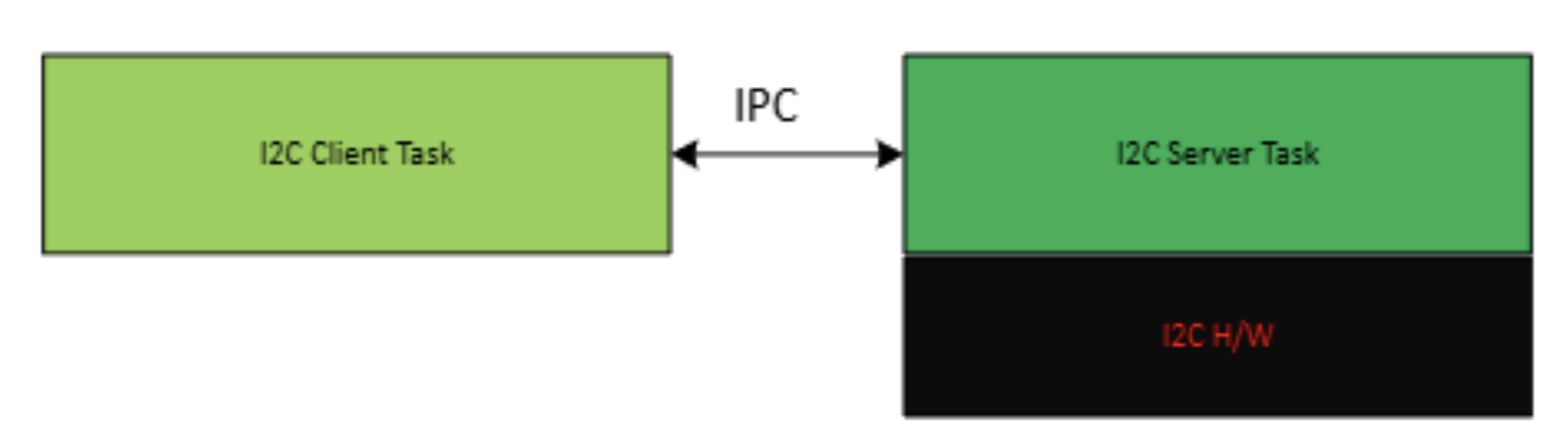

In this section, we explore once more how traits from the Device Development Kit (DDK) can enhance portability by decoupling the application writer from the underlying operating system architecture. This scenario is applicable to any microkernel-based O/S.

The xyz-i2c-ipc-impl crate depicted above is distributed as a no_std driver crate and is linked to an I2C client task. The I2C client task is an application that interacts with a user-mode device driver, named the I2C server task, via message passing.

The I2C server task owns the actual peripheral and is linked to the xyz-i2c-drv-imp driver crate, which is a low-level driver.

The I2C client task sends requests to the I2C peripheral owned by the server task via message passing, completely oblivious to the underlying implementation.
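The client/server arrangement can be approximated on a host machine with threads and channels standing in for tasks and kernel IPC. This is a sketch under that assumption only: `I2cWrite`, `I2cIpcClient`, `MockLowLevelDriver`, `spawn_i2c_server`, and `write_config` are hypothetical names, and a real implementation would be no_std and use the message-passing primitives of the target O/S instead of `std::sync::mpsc`.

```rust
use std::sync::mpsc::{channel, Sender};
use std::thread;

/// Driver trait shared by the proxy and the low-level driver (hypothetical).
pub trait I2cWrite {
    type Error;
    fn write(&mut self, address: u8, bytes: &[u8]) -> Result<(), Self::Error>;
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum I2cError {
    Nack,
    ServerUnavailable,
}

/// Message sent from a client task to the server task.
struct Request {
    address: u8,
    bytes: Vec<u8>,
    reply: Sender<Result<(), I2cError>>,
}

/// Stand-in for the low-level driver linked into the server task.
/// Only a device at address 0x50 acknowledges in this mock.
struct MockLowLevelDriver;

impl I2cWrite for MockLowLevelDriver {
    type Error = I2cError;
    fn write(&mut self, address: u8, _bytes: &[u8]) -> Result<(), I2cError> {
        if address == 0x50 { Ok(()) } else { Err(I2cError::Nack) }
    }
}

/// Proxy linked into the client task: implements the same trait by
/// forwarding each call to the server and waiting for the reply.
pub struct I2cIpcClient {
    server: Sender<Request>,
}

impl I2cWrite for I2cIpcClient {
    type Error = I2cError;
    fn write(&mut self, address: u8, bytes: &[u8]) -> Result<(), I2cError> {
        let (reply, response) = channel();
        let request = Request { address, bytes: bytes.to_vec(), reply };
        self.server
            .send(request)
            .map_err(|_| I2cError::ServerUnavailable)?;
        response.recv().map_err(|_| I2cError::ServerUnavailable)?
    }
}

/// Starts the server "task", which owns the driver, and returns a client proxy.
pub fn spawn_i2c_server() -> I2cIpcClient {
    let (server, requests) = channel::<Request>();
    thread::spawn(move || {
        let mut driver = MockLowLevelDriver;
        for request in requests {
            let result = driver.write(request.address, &request.bytes);
            // The client may have gone away; ignore a failed reply.
            let _ = request.reply.send(result);
        }
    });
    I2cIpcClient { server }
}

/// Application code: written against the trait, unaware of the transport.
pub fn write_config<B: I2cWrite>(bus: &mut B) -> Result<(), B::Error> {
    bus.write(0x50, &[0x01, 0x02])
}
```

`write_config` compiles unchanged against either `I2cIpcClient` or a low-level driver, which is the portability benefit this use case describes.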

Terminology

The following acronyms and abbreviations are used throughout this document.

| Abbreviation | Description |

|---|---|

| AES | Advanced Encryption Standard |

| BMC | Baseboard Management Controller |

| CA | Certificate Authority |

| CPU | Central Processing Unit |

| CRL | Certificate Revocation List |

| CSR | Certificate Signing Request |

| CSP | Critical Security Parameter |

| DICE | Device Identifier Composition Engine |

| DRBG | Deterministic Random Bit Generator |

| ECDSA | Elliptic Curve Digital Signature Algorithm |

| FMC | FW First Measured Code |

| GPU | Graphics Processing Unit |

| HMAC | Hash-based Message Authentication Code |

| IDevId | Initial Device Identifier |

| iRoT | Internal RoT |

| KAT | Known Answer Test |

| KDF | Key Derivation Function |

| LDevId | Locally Significant Device Identifier |

| MCTP | Management Component Transport Protocol |

| NIC | Network Interface Card |

| NIST | National Institute of Standards and Technology |

| OCP | Open Compute Project |

| OTP | One-Time Programmable |

| PCR | Platform Configuration Register |

| PKI | Public Key Infrastructure |

| PLDM | Platform Level Data Model |

| PUF | Physically Unclonable Function |

| RoT | Root of Trust |

| RTI | RoT for Identity |

| RTM | RoT for Measurement |